We’ve been working to convey elements of Quip’s expertise into Slack with the canvas characteristic, whereas additionally sustaining the stand-alone Quip product. Quip’s backend, which powers each Quip and canvas, is written in Python. That is the story of a difficult bug we encountered final July and the teachings we discovered alongside the best way about being cautious with TCP state. We hope that displaying you ways we tackled our bug helps you keep away from — or discover — related bugs sooner or later!

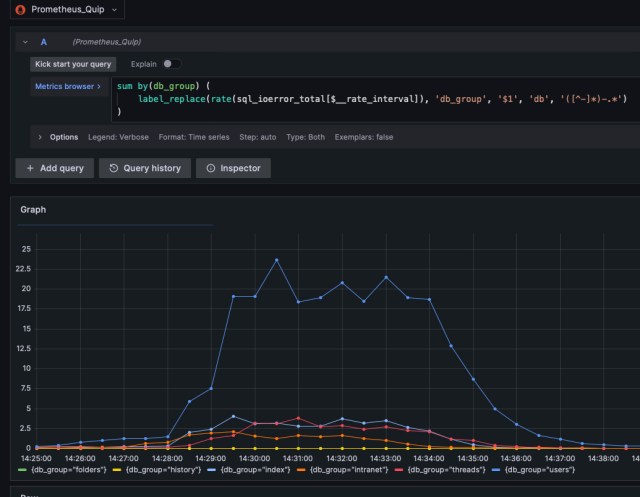

Our journey started with a spike in EOFError errors throughout SQL queries. The errors have been distributed throughout a number of companies and a number of database hosts:

Investigation

The stacktrace confirmed an asyncio.IncompleteReadError, which we translate to EOFError, when studying the response from the database:

File "core/mysql.py", line 299, in __read_result_set

header = await self.__read_packet(timeout=timeout)

File "core/mysql.py", line 532, in __read_packet

header = await self.conn.read_exactly(4, timeout=timeout)

File "core/runtime_asyncio.py", line 1125, in read_exactly

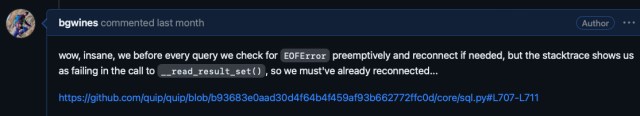

increase EOFError() from NoneRight here’s the related code, which had not been touched just lately, together with some related baffled commentary:

async def _perform_query_locked(...) -> core.sql.End result:

...

if not self.conn.is_connected():

await self.__connect(...)

await self.__send_command(...)

result_set = await self.__read_result_set(...) # <-- EOFError

There are a couple of locations the place we shut our connection to the database, e.g. if we see one in every of a sure set of errors. One preliminary speculation was that one other Python coroutine closed the connection for its personal causes, after we issued the question however earlier than we learn the response. We shortly discarded that idea since connection entry is protected with an in-memory lock, which is acquired at the next degree within the code. Since that may be unimaginable, we reasoned that the opposite facet of the connection will need to have closed on us.

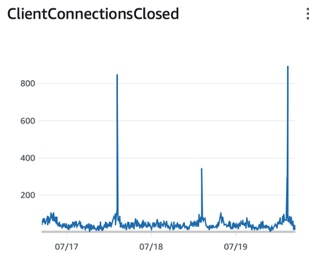

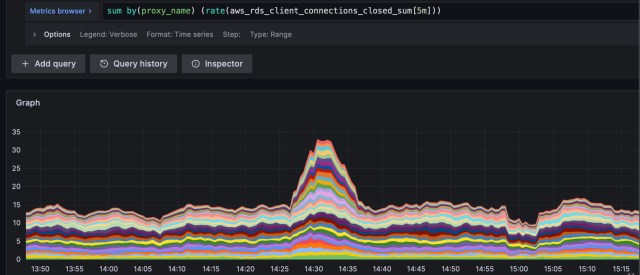

This idea was bolstered once we discovered that our database proxies closed a bunch of connections on the time of the occasion. The closes have been distributed impartial of database host, which was in line with the incident’s floor space. The proxy-close metric, ClientConnectionsClosed, represents closes initiated by both facet, however now we have a separate metric for once we provoke the shut, and there was no improve in exercise from us on the time. The timing right here was extraordinarily suspicious, though it nonetheless didn’t fairly make sense why this could end in such a excessive fee of errors on the response learn since we had simply ensured connection state.

One thing clearly wasn’t including up, so it was time to dig deeper. Suspecting the if not self.conn.is_connected(): line, we checked out our implementation for AsyncioConnection:

class AsyncioConnection(core.runtime.Connection[memoryview]):

def closed(self) -> bool:

return not self.author or self.author.is_closing()

def is_connected(self) -> bool:

return self.relatedEach of those features turned out to be incorrect. First, let’s take a look at closed(). Every Asyncio connection has a StreamReader and a StreamWriter. Our code recommended that the closed state is just a operate of the author, which is managed by the applying server, not the reader. This appeared suspiciously incomplete to us since we anticipated solely the reader to concentrate on whether or not the opposite facet closed the connection. We proved this with a unit check:

async def test_reader_at_eof_vs_writer_is_closing(self):

conn = await self.create_connection()

# Ask the unit check's server to shut

await conn.write(self.encode_command("/stop"))

# Do not learn. Nonetheless open since we have not seen the

# response but

self.assertFalse(conn.author.is_closing())

# Learn, then sleep(0) because it requires one other run

# by means of the scheduler loop so the stream can detect

# the zero learn/eof

response = await conn.read_until(self.io_suffix)

await core.runtime.sleep(0)

self.assertTrue(conn.reader.at_eof()) # passes

self.assertTrue(conn.author.is_closing()) # failsSubsequent, let’s take a look at is_connected(). First, it’s not a operate of closed(), so the 2 have the potential to float. Second, it might return a false constructive: the one place the place we set the occasion variable self.related to false is when the applying server closes the connection, so just like closed() it’s unaware of the reader being within the EOF state.

We determined to log a metric so we might examine self.related to not self.closed() to see precisely how a lot they drift. We discovered six extra bugs, which led to 1 coworker coining the pleasant phrase “while you go choosing up rocks, you would possibly discover issues underneath these rocks,” which we instantly adopted:

is_connected()may be a false destructive. We noticed that the false negatives solely occurred on the companies that keep websocket connections to purchasers. The one place we setself.relatedto true is when the applying server initiates the connection, so it’s by no means set to true when it’s initiated by the shopper.- There’s a test we carry out upon connection lock launch to see if there’s any knowledge on the connection we haven’t learn. It was incorrect to carry out this test in some circumstances on websocket connections because it’s anticipated that the shopper can ship knowledge at any time limit.

- Our HTTP shopper pool might comprise closed purchasers.

- We didn’t accurately deal with exceptions throughout reconnect.

- We discovered one other place the place we needs to be eradicating connections from the pool.

- The Redis-specific cleanup we thought we’d all the time do upon connection shut wasn’t occurring when the shut wasn’t initiated by the applying server.

Decision

At this level we had all of the items of the puzzle and have been in a position to perceive the sequence of occasions:

- The proxy closed database connections on us. After speaking with AWS we discovered that they launched habits the place the proxy closes connections after 24h even when it isn’t idle, so the closes have been tied to our each day launch, which restarts all of our servers.

- We failed to acknowledge the connections have been closed for the reason that shut was not initiated by us, and consequently we didn’t reconnect previous to issuing SQL queries.

- We’d problem a question, then try to learn the response, however the reader could be within the EOF state from the shut, so it could increase

EOFError.

We deployed the repair and noticed the near-total discount in EOFError throughout SQL queries:

As we deployed the repair for the case the place connections are initiated by the shopper, the false negatives disappeared fully, too:

The Asyncio Migration

The Asyncio Migration

The first influence of this bug repair, nonetheless, wasn’t even the improved dealing with of connection closes. The first influence was the unblocking of the Python runtime migration.

The migration undertaking started in 2020 and was from our customized runtime — we have been early adopters of Python 3 — to asyncio, the usual Python IO library launched in 3.4. The undertaking ran easily and was enabled in all environments with only one exception: one service on our largest single-tenant cluster, for the reason that buyer would sporadically expertise timeouts when exporting spreadsheets to PDF. The difficulty solely occurred at occasions of peak load and was tough to breed, so the undertaking stalled.

This PDF export handler makes an RPC request to a different service that handles the PDF era itself. That request goes over an current connection from a connection pool, if one exists. If the RPC service is overloaded, it might shut that connection, and in that case we’d fail to acknowledge that. The handler would hearth off the RPC request, however the different facet wouldn’t be listening, in order that request would cling on write and ultimately trip for the reason that buffer would by no means get drained by the RPC service. For small exports we count on it could have raised an EOFError instantly as an alternative of hanging because it wouldn’t utterly fill the buffer.

After launching the connection state fixes, we re-enabled asyncio on the ultimate service, and confirmed that the errors didn’t recur. The asyncio undertaking resumed, entered a really satisfying section of deleting code in our customized runtime scheduler, and concluded shortly after.

Conclusion

That is difficult to get proper! As we see from https://man7.org/linux/man-pages/man3/shutdown.3p.html a socket will be in a half-shutdown state. We had anticipated from the documentation that StreamWriter.is_closing() would embody that half-shutdown state, however we must always have been extra cautious:

Return True if the stream is closed or within the strategy of being closed.

In reality, each the default event loop and uvloop comprise the identical habits as we did, the place the closing state is just set upon client-initiated shut. This explains why the unit check talked about earlier than failed each with uvloop enabled and disabled and why it was inadequate to rely solely on StreamWriter.is_closing().

When the opposite facet of a TCP connection sends FIN, we enter the CLOSE WAIT state. However in that state we are able to nonetheless try to learn for so long as we like. We’ll solely exit that state once we inform the OS to shut or shut down the socket.

Above all, my private takeaway is to all the time decide up rocks[1]. On this case it might have been tempting to easily name it a day after including EOFError to the checklist of exceptions upon which we reissue the question to a failover database. However with low-level code, it’s normally value the additional effort and time to dig a bit deeper if one thing simply doesn’t fairly appear proper. Even when issues are largely wonderful on the floor, bugs down there can have far-reaching results.

Particular because of Ross Cohen for his arduous work and camaraderie by means of this rollercoaster of a bug. Couldn’t ask for a greater buddy on this one!

When you loved this put up, you may also like working right here. Try our careers page!

[1] You would possibly discover issues underneath these rocks!