- We’re explaining the end-to-end systems the Facebook app leverages to deliver relevant content to people.

- Learn about our video-unification efforts that have simplified our product experience and infrastructure, in-depth details around mobile delivery, and new features we’re working on in our video-content delivery stack.

The end-to-end delivery of highly relevant, personalized, timely, and responsive content comes with complex challenges. At Facebook’s scale, the systems built to support and overcome these challenges require extensive trade-off analyses, focused optimizations, and architecture built to allow our engineers to push for the same user and business outcomes.

Video unification on Facebook

It would be hard to talk about Facebook’s video delivery without mentioning our two years of video unification efforts. Many of the capabilities and technologies we’ll reference below wouldn’t have been possible without the efforts taken to streamline and simplify Facebook video products and technical stacks.

In its simplest form, we have three systems to support Facebook video delivery: ranking, server, and mobile.

Ranking (RecSys)

Recommends content that fulfills people’s interests (i.e., short-term, long-term, and real-time) while also allowing for novel content and discovery of content that’s outside the person’s historical engagement. The architecture supports flexibility for various optimization functions and value modeling, and it builds on delivery systems that allow for tight latency budgets, rapid modeling deployments, and bias towards fresh content. (We classify freshness as how long ago ranking generated this video candidate. We generally consider fresher content to be better, since it operates on more recent and relevant signals.)

Server (WWW)

Brokers between mobile/web and RecSys. It serves as the central business logic that powers all of Facebook video’s feature sets and delivers the appropriate content recommendations from RecSys to the mobile clients. It controls key delivery characteristics such as content pagination, deduplication, and ranking signal collection. It also manages key systems tradeoffs such as capacity through caching and throttling.

Mobile – Facebook for Android (FB4A) and Facebook for iOS (FBiOS)

Facebook’s mobile apps are highly optimized for user experience beyond the pixels. Mobile is built with frameworks, such as client-side ranking (CSR), that allow for delivery of the content that’s most optimal to people at exactly the point of consumption, at times without needing a round trip to the server.

Why unify?

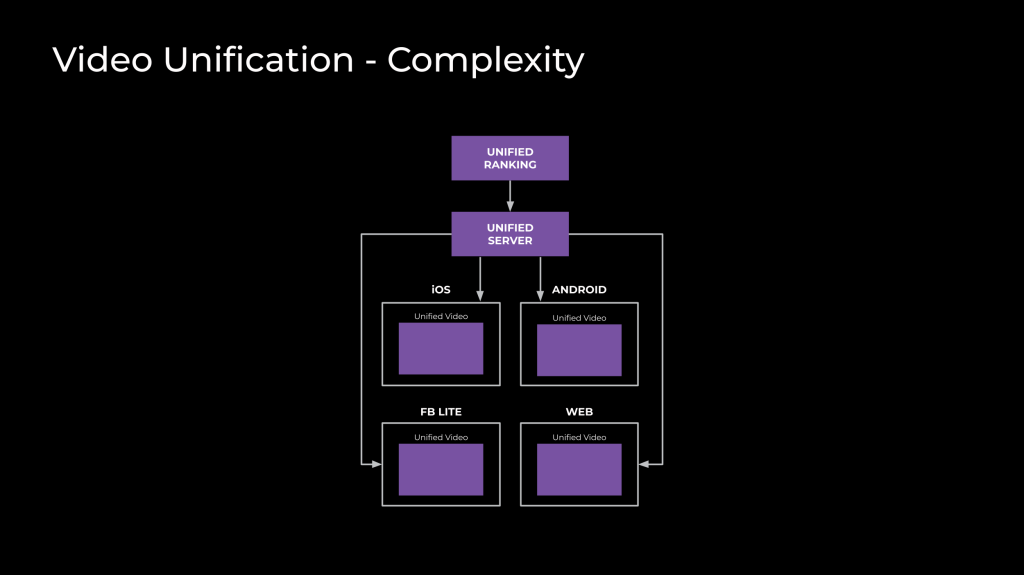

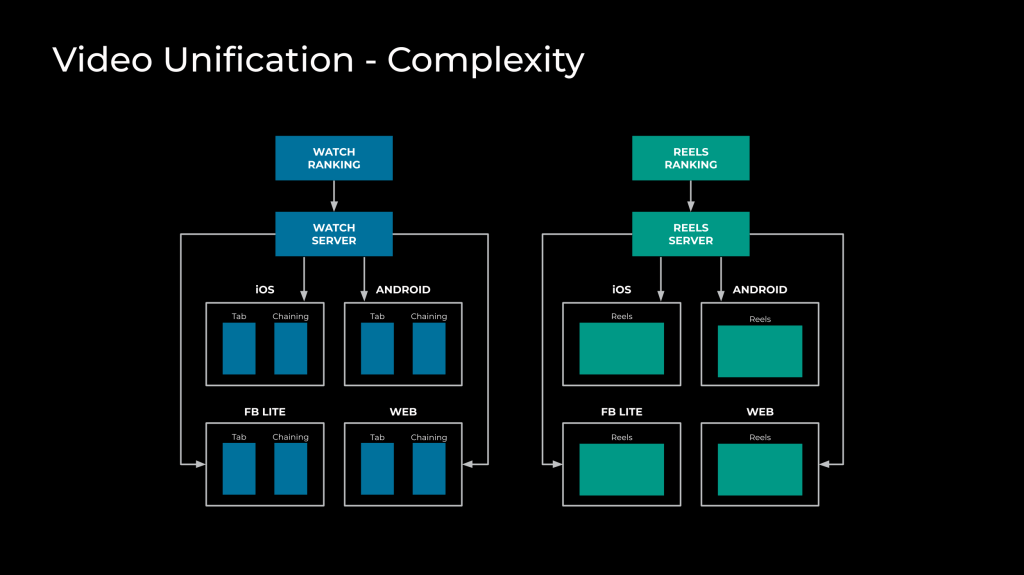

Let’s dive into our video unification efforts. Previously, we had various user experiences, mobile client layers, server layers, and ranking layers for Watch and Reels. In the past couple of years, we have been consolidating the app’s video experiences and infrastructure into a single entity.

The reason is simple. Maintaining multiple video products and services leads to a fragmented user and developer experience. Fragmentation leads to slower development times, complicated and inconsistent user experiences, and fewer positive app recommendations. Facebook Watch and Reels on Facebook, two similar products, were functioning quite separately, which meant we couldn’t share improvements between them, leading to a worse experience across the board.

This separation also created a lot of overhead for creators. Previously, if creators wanted distribution to a certain surface, they would need to create two types of content, such as a Reel for immersive Reels surfaces and a VOD for the Watch tab. For advertisers, this meant creating different ads for different ad formats.

Mobile and server technical stack unification

The first step in unification was unifying our two client and server data models and two architectures into one, with no changes to the user interface (UI). The complexity here was immense, and this technical stack unification took a year across the server, iOS, and Android, but it was a necessary step in paving the way for further steps.

Several variables added to the complexity, including:

- Billions of users for the product. Any small, unintentional shift in logging, UI, or performance would immediately be seen in top-line metrics.

- Tens of thousands of lines of code per layer across Android, iOS, and the server.

- Merging Reels and Watch while keeping the best of both systems took a lot of auditing and debugging. (We needed to audit hundreds of features and thousands of lines of code to ensure that we preserved all key experiences.)

- The interactions between layers also needed to be maintained while the code beneath them was shifting. Logging played a key role in ensuring this.

- Product engineers continued work on both the old Reels and Watch systems, improving the product experience for users and improving key video metrics for the Facebook app. This created a “moving goal post” effect for the new unified system, since we had to match these new launches. We had to move quickly and choose the right “cutoff” point to move all the video engineers to work on the new stack as early as possible.

  - If we transferred the engineers too early, roughly 50 product engineers wouldn’t be able to hit their goals, while also causing churn in the core infrastructure.

  - If we transferred them too late, even more work would be required on the new, unified infrastructure to port old features.

- Maintenance of logging for core metrics for the new stack. Logging is extremely sensitive and implemented in different ways across surfaces. We often had to re-implement logging in a new way to serve both products. We also had to ensure we maintained hundreds of key logging parameters.

- We had to do all of this while maintaining engagement and performance metrics to ensure the new architecture met our performance bar.

Migrating Watch users to a Reels surface

The next step was moving all our VOD feed-chaining experiences to use the immersive Reels UI. Since the immersive Reels UI was optimized for viewing short-form video, whereas the VOD feed-chaining UI was optimized for viewing long-form video, it took many product iterations to ensure that the unified surface could serve all our users’ needs without any compromises. We ran hundreds of tests to identify and polish the most optimal video feature set. This project took another year to complete.

Unifying ranking across Reels and Watch

The next step, which shipped in August of 2024, was unifying our ranking layers. This had to be done after the previous layers, because ranking relies on the signals derived from the UI across surfaces being the same. For example, the Like button is on top of the vertical sidebar and quite prominent on the Reels UI. But on the Watch UI, it’s on the bottom at the far left. They’re the same signal but have different contexts, and if ranking treated them equally you’d see a degradation in video recommendations.

In addition to UI unification, another significant challenge is building a ranking system that can recommend a mixed inventory of Reels and VOD content while catering to both short-form video-heavy and long-form video-heavy users. The ranking team has made tremendous progress in this regard, starting with the unification of our data, infrastructure, and algorithmic foundations across the Reels and Watch stacks. They followed with the creation of a unified content pool that includes both short-form and long-form videos, enabling greater content liquidity. The team then optimized the recommendation machine learning (ML) models to surface the most relevant content without video length bias, ensuring a seamless transition for users with different product affinities (e.g., Reels heavy versus Watch heavy) to a unified content-recommendation experience.

The unified video tab

The last step was shipping the new video tab. This tab uses a Reels immersive UI, with unified ranking and product infrastructure across all layers to deliver recommendations ranging from Reels to long-form VOD and live videos. It allows us to deliver the best of all worlds from a UI, performance, and recommendations perspective.

With video unification nearly completed, we’re able to accomplish much deeper integrations and complex features end to end across the stack.

Video unification precursor

Before any formal video unification occurred across the Facebook app, we made a smaller effort within the video organization to modernize the Watch UI. When you tap on a video, the Watch Tab will open a new video-feed modal screen. This leads to surfaces within surfaces, which can be a confusing experience. Watch Tab is also closer to the News Feed UI, which doesn’t match modern immersive video products in the industry.

This project worked to make the Watch Tab UI immersive and modern, while also flattening the feeds within feeds into a single feed. The challenge was that we had not consolidated our infrastructure layers across mobile, server, and ranking. This led to slowdowns when trying to implement modern recommendation features. We also realized too late that ranking would play a key role in this project, and we made ranking changes late in the project life cycle.

These key learnings allowed the video organization to take the right steps and order of operations listed above. Without the learnings from this project, we might not have seen a successful video unification outcome.

How Facebook’s video delivery system works

When delivering content to people on Facebook, we operate from five key principles:

- Prioritize fresh content.

- Let ranking decide the order of content.

- Only vend content (moving it from memory to the UI layer) when needed and vend as little as possible.

- Ensure fetching behavior is deterministic.

- Give people content when there’s a clear signal they want it.

The lifecycle of a video feed network request

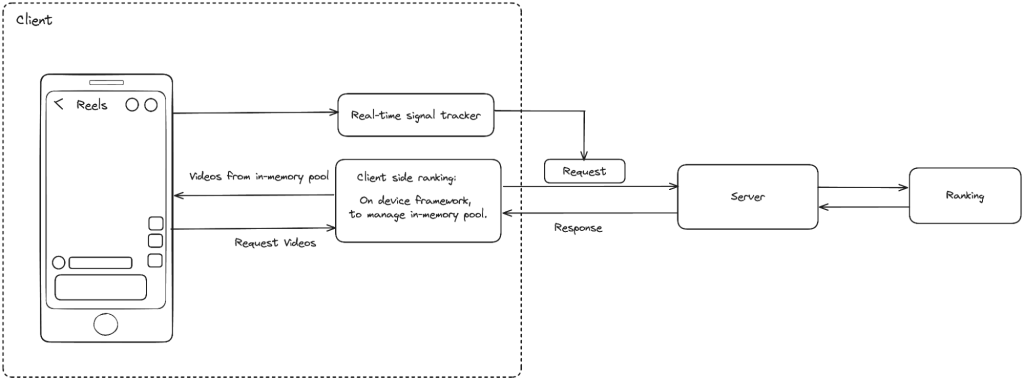

The mobile client sends a request

The mobile client generally has a few mechanisms in place that can trigger a network request. Each request type has its own trigger. A prefetch request (one issued before the surface is visible) is triggered a short time after app startup. Prefetch is available only for our tab surface.

A head load (the initial network request) is triggered when the user navigates to the surface. A tail load request (which includes all the subsequent requests) is triggered every time the user scrolls, with some caveats.

A prefetch and a head load can both be in flight at once. We’ll vend content for whichever one returns first. Or, if they both take too long, we’ll vend cache content.
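The race between prefetch, head load, and cache can be sketched roughly as follows. This is a minimal Python illustration, not the client’s actual code: `vend_first`, `fake_request`, the timeout values, and the `(source, stories)` return shape are all hypothetical names chosen for the example.

```python
import asyncio
from typing import Awaitable, List, Sequence, Tuple


async def vend_first(
    requests: Sequence[Awaitable[List[str]]],
    cached_stories: List[str],
    timeout_s: float,
) -> Tuple[str, List[str]]:
    """Return stories from whichever request finishes first, else the cache."""
    tasks = [asyncio.ensure_future(r) for r in requests]
    done, pending = await asyncio.wait(
        tasks, timeout=timeout_s, return_when=asyncio.FIRST_COMPLETED
    )
    # Simplification for this sketch: cancel whatever is still in flight.
    for task in pending:
        task.cancel()
    if done:
        # If several requests finished together, any one of them is picked.
        return "network", done.pop().result()
    # Neither request returned within the budget, so fall back to cache.
    return "cache", cached_stories


async def fake_request(label: str, delay_s: float) -> List[str]:
    """Hypothetical stand-in for a prefetch or head-load network call."""
    await asyncio.sleep(delay_s)
    return [f"{label}-story"]


if __name__ == "__main__":
    result = asyncio.run(
        vend_first(
            [fake_request("prefetch", 0.01), fake_request("head", 0.3)],
            ["cached-story"],
            timeout_s=0.15,
        )
    )
    print(result)
```

The timeout is the knob that trades freshness against wait time: a longer budget favors network content, a shorter one favors the cache.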

A tail load will be attempted every time the user scrolls, though we’ll issue a request only if the in-memory pool (the memory store for video stories that are ready for viewing) has three or fewer video stories from the network. So, if we have four stories from the network, we won’t issue the request. If we have four stories from the cache, we’ll issue the network request. Alongside the tail load request, we’ll send user signals to ranking to help generate good video candidates for that request.
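The tail load rule reduces to a small predicate over the pool. The sketch below is only an illustration: the threshold of three comes from the description above, while `PooledStory`, `from_network`, and `should_issue_tail_load` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable

# From the text: issue a tail load only with three or fewer network stories.
NETWORK_STORY_THRESHOLD = 3


@dataclass(frozen=True)
class PooledStory:
    video_id: str
    from_network: bool  # False means the story came from the local cache


def should_issue_tail_load(pool: Iterable[PooledStory]) -> bool:
    """Cached stories do not count toward the threshold."""
    network_count = sum(1 for story in pool if story.from_network)
    return network_count <= NETWORK_STORY_THRESHOLD


if __name__ == "__main__":
    four_network = [PooledStory(f"n{i}", True) for i in range(4)]
    four_cached = [PooledStory(f"c{i}", False) for i in range(4)]
    print(should_issue_tail_load(four_network))
    print(should_issue_tail_load(four_cached))
```

Counting only network stories means a pool full of cached content still triggers a fetch, which is consistent with the principle of prioritizing fresh content.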

The server receives a request

When a client request arrives at the server, it passes through several key layers. Since it’s a GraphQL request, the first of these layers is the GraphQL framework and our GraphQL schema definition of the video data model. Next is the video delivery stack, which is a generalized architecture capable of serving video for any product experience in Facebook. This architecture has flexibility in supporting various backing-data sources: feeds, databases such as TAO, caches, or other backend systems. So, whether you’re looking at a profile’s video tab, browsing a list of videos that use a certain soundtrack or hashtag, or visiting the new video tab, the video delivery stack serves all of these.

For the video tab, the next step is the Feed stack. At Facebook, we have lots of feeds, so we’ve built a common infrastructure to build, define, and configure various types of feeds: the main News Feed, Marketplace, Groups, you name it. The video tab’s feed implementation then calls into the ranking backend service.

Across these layers, the server handles a significant amount of business logic. This includes throttling requests in response to tight data-center capacity or disaster-recovery scenarios, caching results from the ranking backend, gathering model-input data from various data stores to send to ranking, and latency tracing to log the performance of our system, as well as piping through client-input parameters that need to be passed to ranking.

Ranking receives a request

Essentially, our ranking stack is a graph-based execution service that orchestrates the entire serving workflow to eventually generate a number of video stories and return them to the web server.

The recommendation-serving workflow typically includes multiple stages such as candidate retrieval from multiple types of retrieval ML models, various filtering, point-wise ranking, list-wise ranking, and heuristic-diversity control. A candidate must go through all these stages to survive and be delivered.
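The staged funnel described above can be pictured as a chain of functions. The following Python sketch is purely illustrative and makes several assumptions: the `Candidate` fields, the precomputed `score` standing in for a point-wise model, the pass-through list-wise step, and the per-creator cap used as the diversity heuristic are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class Candidate:
    video_id: str
    creator: str
    score: float  # stand-in for a point-wise model prediction


def run_funnel(
    retrievers: Iterable[Callable[[], List[Candidate]]],
    filters: Iterable[Callable[[Candidate], bool]],
    listwise: Callable[[List[Candidate]], List[Candidate]],
    max_per_creator: int,
) -> List[Candidate]:
    filters = list(filters)
    # Stage 1: candidate retrieval from several sources, de-duplicated by ID.
    pool: Dict[str, Candidate] = {}
    for retrieve in retrievers:
        for candidate in retrieve():
            pool.setdefault(candidate.video_id, candidate)
    # Stage 2: filtering; a candidate must pass every filter to survive.
    survivors = [c for c in pool.values() if all(keep(c) for keep in filters)]
    # Stage 3: point-wise ranking, scoring each candidate independently.
    ranked = sorted(survivors, key=lambda c: c.score, reverse=True)
    # Stage 4: list-wise ranking, which may reorder using the whole list.
    ranked = listwise(ranked)
    # Stage 5: heuristic diversity control (here, a simple per-creator cap).
    per_creator: Dict[str, int] = {}
    result: List[Candidate] = []
    for c in ranked:
        if per_creator.get(c.creator, 0) < max_per_creator:
            result.append(c)
            per_creator[c.creator] = per_creator.get(c.creator, 0) + 1
    return result


if __name__ == "__main__":
    source_a = lambda: [Candidate("a", "x", 0.9), Candidate("b", "x", 0.8)]
    source_b = lambda: [Candidate("c", "y", 0.7), Candidate("d", "y", 0.1)]
    out = run_funnel(
        [source_a, source_b], [lambda c: c.score >= 0.5], lambda lst: lst, 1
    )
    print([c.video_id for c in out])
```

Each stage only ever narrows or reorders the list, which is why a candidate has to survive every stage to be delivered.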

Additionally, our ranking stack provides more advanced features to maximize value for people. For example, we use root video to contextualize the top video stories to achieve a pleasant user experience. We also have a framework called elastic ranking that allows dynamic variants of the ranking queries to run based on system load and capacity availability.

The server receives a response from ranking

At the most basic level, ranking gives the server a list of video IDs and ranking metadata for each one. The server then needs to load the video entities from our TAO database and execute privacy checks to ensure that the viewer can see these videos. The logic of these privacy checks is defined on the server, so ranking can’t execute these privacy checks, although ranking has some heuristics to reduce the prevalence of recommending videos that the viewer won’t be able to see anyway. Then the video is passed back to the GraphQL framework, which materializes the fields that the client’s query originally asked for. These two steps, privacy checking and materialization, together constitute a significant portion of global CPU usage in our data centers, so optimizing here is a significant focus area to alleviate our data center demand and power consumption.
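As a rough mental model of these two steps, the sketch below loads each ranked ID, drops anything the viewer cannot see, and then returns only the fields the client asked for. It is a simplified illustration in Python, not the server’s implementation: `build_response`, `load_entity`, `can_view`, and the dictionary-shaped entities are hypothetical stand-ins for the entity store, the privacy layer, and GraphQL field materialization.

```python
from typing import Callable, Dict, List, Optional, Sequence

Entity = Dict[str, object]


def build_response(
    ranked_ids: Sequence[str],
    load_entity: Callable[[str], Optional[Entity]],
    can_view: Callable[[Entity], bool],
    requested_fields: Sequence[str],
) -> List[Entity]:
    response: List[Entity] = []
    for video_id in ranked_ids:
        entity = load_entity(video_id)
        # Privacy check runs on the server, after ranking has returned IDs.
        if entity is None or not can_view(entity):
            continue
        # Materialize only the fields the client's query requested.
        response.append({name: entity.get(name) for name in requested_fields})
    return response


if __name__ == "__main__":
    store = {
        "v1": {"id": "v1", "title": "Public clip", "audience": "public"},
        "v2": {"id": "v2", "title": "Private clip", "audience": "only_me"},
    }
    print(
        build_response(
            ["v1", "v2", "v3"],
            store.get,
            lambda e: e["audience"] == "public",
            ["id", "title"],
        )
    )
```

Both steps run once per candidate video, which helps explain why their aggregate cost is large.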

The mobile client receives a response from the server

When the client receives the network response, the video stories are added to the in-memory pool. We prioritize the stories based on the server sort key, which is provided by the ranking layer, as well as on whether each story has been viewed by the person. In this way, we defer content prioritization to the ranking layer, which has much more complex mechanisms for content recommendation compared to the client.

By deferring to the server sort key on the client, we accomplish our key principles of deferring to ranking for content prioritization as well as prioritizing fresh content.
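One way to picture the pool ordering is a two-part sort key. The sketch below is a guess at the shape of that logic, with loud assumptions: it treats unviewed stories as higher priority than viewed ones and a larger `server_sort_key` as better. The text does not specify either direction, and the `Story` type and `prioritize` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Story:
    video_id: str
    server_sort_key: float  # provided by the ranking layer
    viewed: bool = False


def prioritize(pool: List[Story]) -> List[Story]:
    """Order the pool: unviewed first (assumed), then by sort key descending (assumed)."""
    return sorted(pool, key=lambda s: (s.viewed, -s.server_sort_key))


if __name__ == "__main__":
    pool = [
        Story("old-viewed", 9.0, viewed=True),
        Story("fresh-low", 2.0),
        Story("fresh-high", 5.0),
    ]
    print([s.video_id for s in prioritize(pool)])
```

The client applies no scoring of its own here; it only orders by what ranking sent plus local view state.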

The balance for mobile clients is between content freshness and performance/efficiency. If we wait too long for the network request to complete, people will leave the surface. If we instantly vend cache every time someone comes to the surface, the first pieces of content they see may be stale and thus not relevant or interesting.

If we fetch too often, then our capacity costs will increase. If we don’t fetch enough, relevant network content won’t be available, and we’ll serve stale content.

When stories are added from the in-memory pool, we also perform media prefetching; this ensures that swiping from one video to another is a seamless experience.

This is the constant balancing act we have to play on behalf of our mobile clients in the content delivery space.

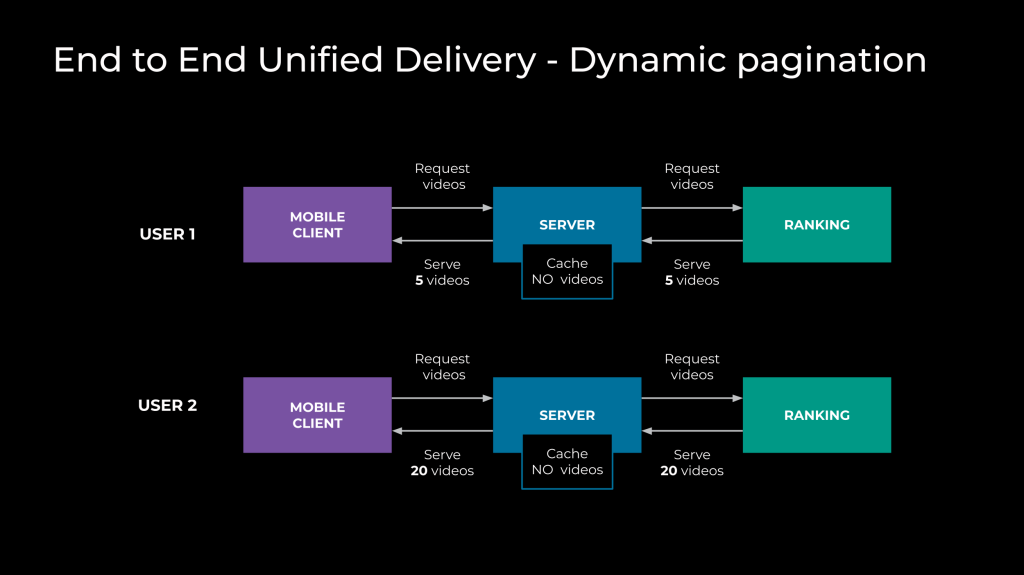

Dynamic pagination, a new approach to video feed delivery

In a typical delivery scenario, everyone on Facebook receives a page of videos with a fixed size from ranking to client. However, this approach can be limiting for a large user base where we need to optimize capacity costs on demand. User characteristics vary widely, ranging from those who never swipe down on a video to those who consume many videos in a single session. Someone could be completely new to our video experience or someone who visits the Facebook app multiple times a day. To accommodate both ends of the user-consumption spectrum, we developed a new and dynamic pagination framework.

Under this approach, the ranking layer has full control over the video page size that should be ranked for a given person and served. The server’s role is to provide a guardrail for deterministic page size contracts between the server and client device. In summary, the contract between ranking and server is dynamic page size, while the contract between server and client is fixed page size, with the smallest possible value. This setup helps ensure that if the quantity of ranked videos is too large, the person’s device doesn’t end up receiving all of them. At the same time, it simplifies client-delivery infrastructure by ensuring there’s deterministic page size behavior between the client and server.

With the above setup, ranking can provide personalization to varying degrees. If ranking is confident in its understanding of someone’s consumption needs, it can output a larger set of ranked content. Conversely, if ranking is less confident, it can output a smaller set of ranked content. By incorporating this level of personalization, we can carefully curate content for people who are relatively new to the platform while providing a larger recommendation batch for regular users. This approach allows us to conserve capacity and serve the best content to our extremely large user base.
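The two contracts can be illustrated with a small server-side buffer: ranking returns batches of whatever size it chooses, and the server hands the client fixed-size pages. This Python sketch makes several assumptions: `FixedPageServer`, the buffering strategy, and the page size of 2 are hypothetical, and how ranking picks its batch size is hidden inside the `rank_batch` callable.

```python
from typing import Callable, List


class FixedPageServer:
    """Serves fixed-size pages to the client from dynamically sized ranked batches."""

    def __init__(self, rank_batch: Callable[[], List[str]], client_page_size: int):
        self._rank_batch = rank_batch  # ranking decides how many videos to return
        self._page_size = client_page_size  # fixed contract with the client
        self._buffer: List[str] = []

    def next_page(self) -> List[str]:
        # Call ranking only when the buffered results cannot fill a page.
        if len(self._buffer) < self._page_size:
            self._buffer.extend(self._rank_batch())
        page = self._buffer[: self._page_size]
        self._buffer = self._buffer[self._page_size :]
        return page


if __name__ == "__main__":
    # A confident ranking call returns five videos, a less confident one returns one.
    batches = iter([["a", "b", "c", "d", "e"], ["f"]])
    server = FixedPageServer(lambda: next(batches), client_page_size=2)
    for _ in range(3):
        print(server.next_page())
```

In this sketch a large ranked batch is spread across several client pages, so fewer ranking calls are needed for people whose consumption ranking can predict well.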

Real-time ranking

Real-time ranking adjusts video content ranking based on user interactions and engagement signals, delivering more relevant content as people interact with the platform.

Collecting real-time signals such as video view time, likes, and other interactions enables accurate ranking. These signals can be collected using asynchronous data pipelines, or using synchronous data pipelines such as piggybacking a batch of these signals onto the next tail load request. This process depends on system latency and signal completeness. It’s important to note that if the snapshot of real-time signals between two distinct ranking requests is similar, there’s little to no adjustment that ranking can perform to react to the person’s current interest.
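The synchronous, piggybacked path can be sketched as a client-side buffer that is drained into each tail load request. The code below is only an illustration under assumptions: `SignalBuffer`, `build_tail_load_request`, and the request and signal field names are hypothetical and do not reflect the real request schema.

```python
from typing import Dict, List


class SignalBuffer:
    """Accumulates engagement signals between network requests."""

    def __init__(self) -> None:
        self._pending: List[Dict[str, object]] = []

    def record(self, video_id: str, kind: str, value: float = 1.0) -> None:
        self._pending.append({"video_id": video_id, "kind": kind, "value": value})

    def drain(self) -> List[Dict[str, object]]:
        # Hand over the batch and start a fresh one so nothing is sent twice.
        batch, self._pending = self._pending, []
        return batch


def build_tail_load_request(cursor: str, signals: SignalBuffer) -> Dict[str, object]:
    """Attach every signal gathered since the previous request."""
    return {"cursor": cursor, "signals": signals.drain()}


if __name__ == "__main__":
    buffer = SignalBuffer()
    buffer.record("v1", "view_time_s", 12.5)
    buffer.record("v1", "like")
    print(build_tail_load_request("page-2", buffer))
    print(build_tail_load_request("page-3", buffer))
```

The second request in the usage example carries no signals, which mirrors the caveat above: with no new signals between two requests, ranking has little to react to.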

Ranking videos in real time ensures prominent display of relevant and engaging content, while eliminating duplicates for content diversification as well as new topic exploration. This approach enhances user engagement by providing a personalized and responsive viewing experience, adapting to people’s preferences and behaviors in real time across app sessions. Think of responsiveness as how well and consistently our end-to-end infrastructure delivers fresh content.