At Slack, customer love is our first priority and accessibility is a core tenet of customer trust. We have our own Slack Accessibility Standards that product teams follow to ensure their features comply with the Web Content Accessibility Guidelines (WCAG). Our dedicated accessibility team supports developers in following these guidelines throughout the development process. We also frequently collaborate with external manual testers who specialize in accessibility.

In 2022, we started to supplement Slack’s accessibility strategy by setting up automated accessibility tests for desktop to catch a subset of accessibility violations throughout the development process. At Slack, we see automated accessibility testing as a valuable addition to our broader testing strategy. This broader strategy also includes involving people with disabilities early in the design process, conducting design and prototype reviews with these users, and performing manual testing across all of the assistive technologies we support. Automated tools can overlook nuanced accessibility issues that require human judgment, such as screen reader usability. Additionally, these tools can flag issues that don’t align with the product’s specific design considerations.

Despite that, we still felt there would be value in integrating an accessibility testing tool into our test frameworks as part of the overall, comprehensive testing strategy. Ideally, we were hoping to add another layer of support by integrating the accessibility validation directly into our existing frameworks so test owners could easily add checks, or better yet, not have to think about adding checks at all.

Exploration and Limitations

Unexpected Complexities: Axe, Jest, and React Testing Library (RTL)

We chose to work with Axe, a popular and easily configurable accessibility testing tool, for its extensive capabilities and compatibility with our current end-to-end (E2E) test frameworks. Axe checks against a wide variety of accessibility guidelines, most of which correspond to specific success criteria from WCAG, and it does so in a way that minimizes false positives.

Initially we explored the possibility of embedding Axe accessibility checks directly into our React Testing Library (RTL) framework. By wrapping RTL’s render method with a custom render function that included the Axe check, we could remove a lot of friction from the developer workflow. However, we immediately encountered an issue related to the way we’ve customized our Jest setup at Slack. Running accessibility checks through a separate Jest configuration worked, but would require developers to write tests specifically for accessibility, which we wanted to avoid. Reworking our custom Jest setup was deemed too tricky and not worth the time and resource investment, so we pivoted to focus on our Playwright framework.
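To make the wrapping idea concrete, here is a minimal sketch of a render function decorated with an automatic check. It is not Slack’s code: `withA11yCheck`, `fakeRender`, and `checkImagesHaveAlt` are hypothetical names, the render result is a stand-in for what RTL returns, and the check is a toy synchronous rule standing in for an Axe call (real Axe runs are asynchronous).

```typescript
// Stand-in for what a render call returns; RTL's real result is richer.
interface Rendered {
  html: string;
}

// Wrap any render function so that `check` runs on every rendered result.
function withA11yCheck<Args extends unknown[], R>(
  render: (...args: Args) => R,
  check: (rendered: R) => void,
): (...args: Args) => R {
  return (...args: Args): R => {
    const rendered = render(...args);
    check(rendered); // test authors never call the check themselves
    return rendered;
  };
}

// Hypothetical stand-ins so the sketch runs without a DOM or Axe.
const fakeRender = (html: string): Rendered => ({ html });

const findings: string[] = [];
const checkImagesHaveAlt = (rendered: Rendered): void => {
  const imgTags = rendered.html.match(/<img\b[^>]*>/g) ?? [];
  for (const tag of imgTags) {
    if (!/\balt=/.test(tag)) findings.push(`missing alt: ${tag}`);
  }
};

const renderWithA11y = withA11yCheck(fakeRender, checkImagesHaveAlt);
renderWithA11y('<img src="logo.png">');
renderWithA11y('<img src="logo.png" alt="Slack logo">');
```

The appeal of this shape is that existing tests keep calling a render function and pick up the check for free, which is the friction reduction described above.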

The Best Solution for Axe Checks: Playwright

With Jest ruled out as a candidate for Axe, we turned to Playwright, the E2E test framework used at Slack. Playwright supports accessibility testing with Axe through the @axe-core/playwright package. Axe Core provides most of what you’ll need to filter and customize accessibility checks. It provides an exclusion method right out of the box, to prevent certain rules and selectors from being analyzed. It also comes with a set of accessibility tags to further specify the type of analysis to conduct ('wcag2a', 'wcag2aa', etc.).
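As a rough illustration of the tag and exclusion options, the sketch below applies a configuration to a builder. To stay runnable without Playwright installed, it declares `ScanBuilder`, a structural subset of the `withTags`, `exclude`, and `disableRules` methods that `AxeBuilder` exposes. `ScanConfig`, `configureScan`, and every selector or rule in `exampleConfig` are hypothetical and only there for illustration.

```typescript
// Structural subset of AxeBuilder (from @axe-core/playwright) used by this sketch.
interface ScanBuilder {
  withTags(tags: string[]): unknown;
  exclude(selector: string): unknown;
  disableRules(rules: string[]): unknown;
}

// Hypothetical config shape; not part of any library.
interface ScanConfig {
  tags: string[];
  excludedSelectors: string[];
  disabledRules: string[];
}

const exampleConfig: ScanConfig = {
  // Axe tags covering WCAG 2.0 and 2.1, Levels A and AA.
  tags: ['wcag2a', 'wcag2aa', 'wcag21a', 'wcag21aa'],
  // Hypothetical selector for a region with an already-ticketed issue.
  excludedSelectors: ['[data-qa="known-issue-banner"]'],
  // Hypothetical choice; which rules to disable is a product decision.
  disabledRules: ['color-contrast'],
};

// AxeBuilder's configuration methods mutate the builder and return it,
// so the calls do not need to be chained here.
function configureScan<B extends ScanBuilder>(builder: B, config: ScanConfig): B {
  builder.withTags(config.tags);
  for (const selector of config.excludedSelectors) {
    builder.exclude(selector);
  }
  if (config.disabledRules.length > 0) {
    builder.disableRules(config.disabledRules);
  }
  return builder;
}
```

With the real package, this would presumably be called as `configureScan(new AxeBuilder({ page }), exampleConfig)` and followed by `.analyze()` inside a Playwright test.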

Our initial goal was to “bake” accessibility checks directly into Playwright’s interaction methods, such as clicks and navigation, to automatically run Axe without requiring test authors to explicitly call it.

In working towards that goal, we found that the main challenge with this approach stems from Playwright’s Locator object. The Locator object is designed to simplify interaction with page elements by managing auto-waiting, loading, and ensuring the element is fully interactable before any action is performed. This automatic behavior is integral to Playwright’s ability to maintain stable tests, but it complicated our attempts to embed Axe into the framework.

Accessibility checks should run when the entire page or its key components are fully rendered, but Playwright’s Locator only ensures the readiness of individual elements, not the overall page. Modifying the Locator could lead to unreliable audits because accessibility issues might go undetected if checks were run at the wrong time.

Another option, using deprecated methods like waitForElement to control when accessibility checks are triggered, was also problematic. These older methods are less optimized, causing performance degradation, potential duplication of errors, and conflicts with the abstraction model that Playwright follows.

So while embedding Axe checks into Playwright’s core interaction methods seemed ideal, the complexity of Playwright’s internal mechanisms required us to explore some further solutions.

Customizations and Workarounds

To circumvent the roadblocks we encountered with embedding accessibility checks into the frameworks, we decided to make some concessions while still prioritizing a simplified developer workflow. We continued to focus on Playwright because it offered more flexibility in how we could selectively hide or apply accessibility checks, allowing us to more easily manage when and where these checks were run. Additionally, Axe Core came with some great customization features, such as filtering rules and using specific accessibility tags.

Using the @axe-core/playwright package, we can describe the flow of our accessibility check:

- Playwright test lands on a page/view

- Axe analyzes the page

- Pre-defined exclusions are filtered out

- Violations and artifacts are saved to a file

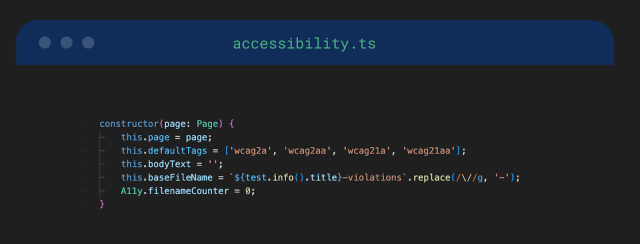

First, we set up our main function, runAxeAndSaveViolations, and customized the scope using what the AxeBuilder class provides.

- We wanted to check for compliance with WCAG 2.1, Levels A and AA

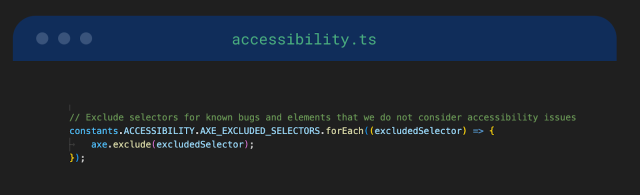

- We created a list of selectors to exclude from our violations report. These fell into two main categories:

  - Known accessibility issues: issues that we’re aware of and have already been ticketed

  - Rules that don’t apply: Axe rules outside of the scope of how Slack is designed for accessibility

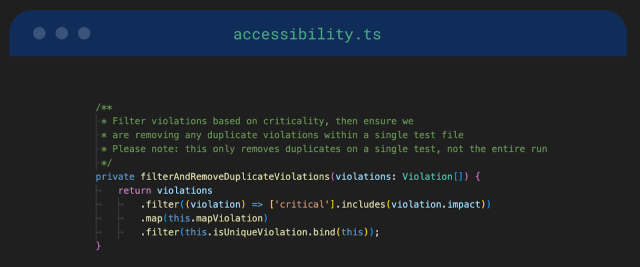

- We also wanted to filter for duplication and severity level. We created methods to check for the uniqueness of each violation and filter out duplication. We chose to report only the violations deemed Critical according to the WCAG. Serious, Moderate, and Minor are other possible severity levels that we may add in the future.

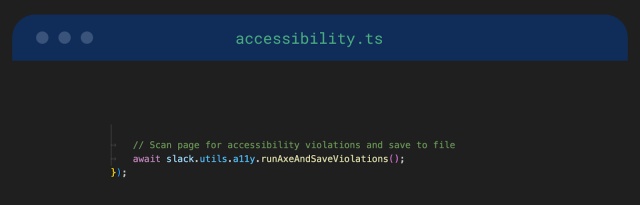

- We took advantage of the Playwright fixture model. Fixtures are Playwright’s way to build up and tear down state outside of the test itself. Within our framework we’ve created a custom fixture called slack, which provides access to all of our API calls, UI views, workflows and utilities related to Slack. Using this fixture, we can access all of these resources directly in our tests without having to go through the setup process every time.

- We moved our accessibility helper to be part of the pre-existing slack fixture. This allowed us to call it directly in the test spec, minimizing some of the overhead for our test authors.

- We additionally took benefit of the power to customise Playwright’s

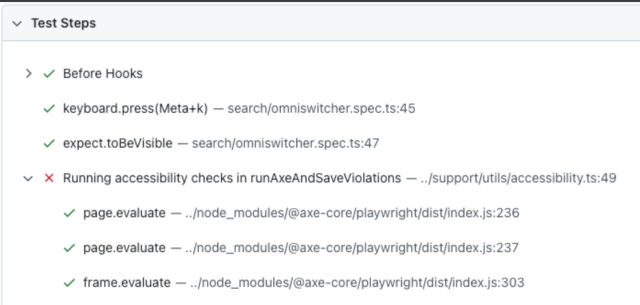

take a look at.step. We added the customized label “Working accessibility checks inrunAxeAndSaveViolations” to make it simpler to detect the place an accessibility violation has occurred:
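The duplication and severity filtering described in the list above could look roughly like the following. This is a sketch under assumptions, not the helper Slack wrote: the `Violation` shape is a simplified subset of an Axe result (real targets can be nested for frames and shadow DOM), the impact names follow axe-core’s `minor`, `moderate`, `serious`, `critical` values, and `violationKey` and `filterViolations` are hypothetical names.

```typescript
type Impact = 'minor' | 'moderate' | 'serious' | 'critical';

// Simplified subset of an Axe violation.
interface Violation {
  id: string; // rule id, e.g. "button-name"
  impact?: Impact | null;
  nodes: { target: string[] }[];
}

// Two violations count as duplicates when the same rule fails on the same targets.
function violationKey(violation: Violation): string {
  const targets = violation.nodes.map((node) => node.target.join(' ')).sort();
  return `${violation.id}|${targets.join(';')}`;
}

// Keep only violations at an allowed impact level, dropping repeats.
function filterViolations(
  violations: Violation[],
  allowedImpacts: ReadonlySet<Impact> = new Set<Impact>(['critical']),
): Violation[] {
  const seen = new Set<string>();
  const kept: Violation[] = [];
  for (const violation of violations) {
    if (!violation.impact || !allowedImpacts.has(violation.impact)) continue;
    const key = violationKey(violation);
    if (seen.has(key)) continue;
    seen.add(key);
    kept.push(violation);
  }
  return kept;
}
```

Passing the allowed impact levels as a parameter keeps the door open for reporting more severity levels later without touching the deduplication logic.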

Placement of Accessibility Checks in End-to-End Tests

To kick the project off, we set up a test suite that mirrored our suite for testing critical functionality at Slack. We renamed the suite to make it clear it was for accessibility tests, and we set it to run as non-blocking. This meant developers would see the test results, but a failure or violation would not prevent them from merging their code to production. This initial suite encompassed 91 tests in total.

Strategically, we considered the placement of accessibility checks within these critical flow tests. In general, we aimed to add an accessibility check for each new view, page, or flow covered in the test. In most cases, this meant placing a check directly after a button click, for example, or a link that leads to navigation. In other scenarios, our accessibility check needed to be placed after signing in as a second user or after a redirect.

It was important to make sure the same view wasn’t being analyzed twice in one test, or potentially twice across multiple tests with the same UI flow. Duplication like this would result in unnecessary error messages and saved artifacts, and slow down our tests. We were also careful to place our Axe calls only after the page or view had fully loaded and all content had rendered.

With this approach, we needed to be deeply familiar with the application and the context of each test case.

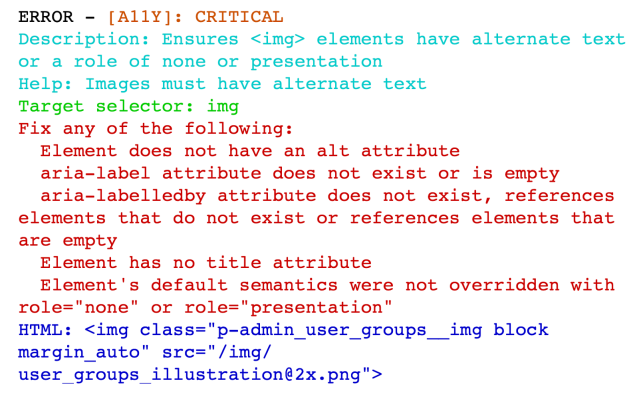

Violations Reporting

We spent some time iterating on our accessibility violations report. Initially, we created a simple text file to save the results of a local run, storing it in an artifacts folder. A few developers gave us early feedback and requested screenshots of the pages where accessibility violations occurred. To achieve this, we integrated Playwright’s screenshot functionality and began saving these screenshots alongside our text report in the same artifact folder.

To make our reports more coherent and readable, we leveraged the Playwright HTML Reporter. This tool not only aggregates test results but also allows us to attach artifacts such as screenshots and violation reports to the HTML output. By configuring the HTML reporter, we were able to display all of our accessibility artifacts, including screenshots and detailed violation reports, in a single test report.

Lastly, we wanted our violation error message to be helpful and easy to understand, so we wrote some code to pull out key pieces of information from the violation. We also customized how the violations were displayed in the reports and on the console, by parsing and condensing the error message.
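A condensed message of this kind might be built as in the sketch below. The fields used (`id`, `impact`, `help`, `helpUrl`, and each node’s `target`) exist on Axe violation objects, but the output layout and the `summarizeViolation` name are assumptions made for illustration, not the format Slack settled on.

```typescript
// Subset of the Axe violation fields this sketch reads.
interface ReportableViolation {
  id: string;
  impact?: string | null;
  help: string; // short human-readable description of the rule
  helpUrl: string; // link to the rule's documentation
  nodes: { target: string[] }[];
}

// Condense one violation into a few readable lines:
// a header, one line per affected element, and a documentation link.
function summarizeViolation(violation: ReportableViolation): string {
  const header = `[${violation.impact ?? 'unknown'}] ${violation.id}: ${violation.help}`;
  const elements = violation.nodes.map((node) => `  - ${node.target.join(' ')}`);
  return [header, ...elements, `  More info: ${violation.helpUrl}`].join('\n');
}
```

The same string can be printed to the console and attached to the HTML report, so both places show identical wording.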

Environment Setup and Running Tests

Once we had integrated our Axe checks and set up our test suite, we needed to determine how and when developers should run them. To streamline the process for developers, we introduced an environment flag, A11Y_ENABLE, to control the activation of accessibility checks within our framework. By default, we set the flag to false, preventing unnecessary runs.

This setup allowed us to offer developers the following options:

- On-Demand Testing: Developers can manually enable the flag when they need to run accessibility checks locally on their branch.

- Scheduled Runs: Developers can configure periodic runs during off-peak hours. We have a daily regression run configured in Buildkite that pipes accessibility test results into a Slack alert channel.

- CI Integration: Optionally, the flag can be enabled in continuous integration pipelines for thorough testing before merging significant changes.
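Reading a flag like A11Y_ENABLE with a default of off can be sketched as follows. Only the flag name comes from the text above. The `isA11yEnabled` helper, the accepted spellings (`true` and `1`, case-insensitive), and the idea of passing the environment in as a plain record are assumptions.

```typescript
// Returns true only when A11Y_ENABLE is explicitly switched on.
// Anything else, including an unset variable, keeps the checks off.
function isA11yEnabled(env: Record<string, string | undefined>): boolean {
  const raw = (env['A11Y_ENABLE'] ?? '').trim().toLowerCase();
  return raw === 'true' || raw === '1';
}

// Run the (potentially slow) accessibility check only when the flag is on.
function runIfEnabled(env: Record<string, string | undefined>, check: () => void): boolean {
  if (!isA11yEnabled(env)) return false;
  check();
  return true;
}
```

In a Node-based test run the helper would typically receive `process.env`, so a local on-demand run amounts to setting `A11Y_ENABLE=true` in front of the usual test command.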

Triage and Ownership

Ownership of individual tests and test suites is often a hot topic when it comes to maintaining tests. Once we had added Axe calls to the critical flows in our Playwright E2E tests, we needed to decide who would be responsible for triaging accessibility issues discovered via our automation and who would own the test maintenance for existing tests.

At Slack, we enable developers to own test creation and maintenance for their tests. To help developers better understand the framework changes and new accessibility automation, we created documentation and partnered with the internal Slack accessibility team to come up with a comprehensive triage process that would fit into their existing workflow for triaging accessibility issues.

The internal accessibility team at Slack had already established a process for triaging and labeling incoming accessibility issues, using the internal Slack Accessibility Standards as a guideline. To enhance the process, we created a new label for “automated accessibility” so we could track the issues discovered via our automation.

To make cataloging these issues easier, we set up a Jira workflow in our alerts channel that would spin up a Jira ticket with a pre-populated template. The ticket is created via the workflow, automatically labeled with “automated accessibility”, and placed in a Jira Epic for triaging.

Conducting Audits

We perform regular audits of our accessibility Playwright calls to check for duplication of Axe calls, and to ensure proper coverage of accessibility checks across tests and test suites.

We developed a script and an environment flag specifically to facilitate the auditing process. Audits can be performed either through sandbox test runs (ideal for suite-wide audits) or locally (for specific tests or subsets). When performing an audit, running the script allows us to take a screenshot of every page that performs an Axe call. The screenshots are then saved to a folder and can be easily compared to spot duplicates.

This process is more manual than we would like, and we’re looking into ways to eliminate this step, potentially leaning on AI assistance to perform the audit for us, or having AI add our accessibility calls to each new page/view, thereby eliminating the need to perform any kind of audit at all.

What’s Next

We plan to continue partnering with the internal accessibility team at Slack to design a small blocking test suite. These tests will be dedicated to the flows of core features within Slack, with a focus on keyboard navigation.

We’d also like to explore AI-driven approaches to the post-processing of accessibility test results and look into the option of having AI assistants audit our suites to determine the placement of our accessibility checks, further reducing the manual effort for developers.

Closing Thoughts

We had to make some unexpected trade-offs in this project, balancing the practical limitations of automated testing tools with the goal of reducing the burden on developers. While we couldn’t integrate accessibility checks completely into our frontend frameworks, we made significant strides towards that goal. We simplified the process for developers to add accessibility checks, ensured test results were easy to interpret, provided clear documentation, and streamlined triage through Slack workflows. In the end, we were able to add test coverage for accessibility in the Slack product, ensuring that our customers who require accessibility features have a consistent experience.

Our automated Axe checks have reduced our reliance on manual testing and now complement other essential forms of testing, like manual testing and usability studies. At the moment, developers need to manually add checks, but we’ve laid the groundwork to make the process as simple as possible, with the possibility of AI-driven creation of accessibility tests.

Roadblocks like framework complexity or setup difficulties shouldn’t discourage you from pursuing automation as part of a broader accessibility strategy. Even if it’s not possible to hide the automated checks completely behind the scenes of the framework, there are ways to make the work impactful by focusing on the developer experience. This project has not only strengthened our overall accessibility testing approach, it has also reinforced the culture of accessibility that has always been central to Slack. We hope it inspires others to look more closely at how automated accessibility might fit into their testing strategy, even if it requires navigating a few technical hurdles along the way.

Thank you to everyone who spent significant time on the editing and revision of this blog post: Courtney Anderson-Clark, Lucy Cheng, Miriam Holland and Sergii Gorbachov.

And a huge thank you to the Accessibility Team at Slack, Chanan Walia, Yura Zenevich, Chris Xu and Hye Jung Choi, for your help with everything related to this project, including editing this blog post!