- The key to developer velocity across AI lies in minimizing time to first batch (TTFB) for machine learning (ML) engineers.

- AI Lab is a pre-production framework used internally at Meta. It allows us to continuously A/B test common ML workflows, enabling proactive improvements and automatically preventing regressions on TTFB.

- AI Lab prevents TTFB regressions while enabling experimentation to develop improvements. For example, during the rollout of the open source Python Cinder runtime, AI Lab was used to yield a 2x increase on the original TTFB improvements, reducing TTFB by up to 40%.

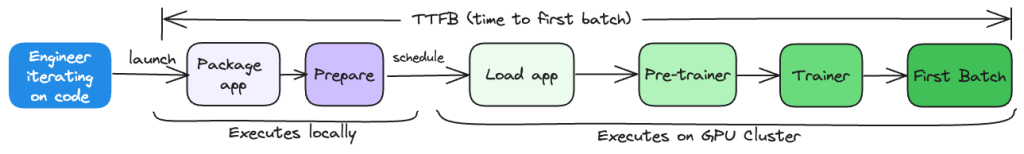

Time to first batch (TTFB), the delay from when a workflow is submitted to the training job’s first batch, plays an important role in accelerating our machine learning (ML) engineers’ iteration speeds. Essentially, TTFB is the time elapsed from the moment you hit the “start” button on your ML model training to the point when the first batch of data enters the model for processing. TTFB influences the overhead for all ML training jobs and is the moment when developers first get a signal on their job.

By minimizing TTFB we’re unblocking our ML engineers, increasing the number of iterations they can do per day, and improving the overall speed of innovation at Meta.

Supporting TTFB across Meta requires a scalable offering that not only enables proactive improvements on this valuable metric, but also keeps it healthy autonomously. To this end we’ve created AI Lab, a pre-production TTFB signal generation tool that empowers infra owners to ship new changes with high confidence, reducing TTFB by up to 40%. This, coupled with automatic prevention of regressions, keeps ML engineers moving fast across Meta.

Optimizing TTFB helps ML engineers move fast

The overhead induced by TTFB is on the critical path for most ML development. It’s composed of components like config validation, feature pre-processing, and infra overhead (like queuing for capacity). Optimizations to components of TTFB can even impact the entire training cycle of some models. At Meta’s scale, the metric value of TTFB often changes subtly as developers iterate on their model, launcher, or architecture.

To get and keep ML engineers moving fast, two things are required:

- Offensively improve TTFB: We need an intuitive, easy-to-use experimentation framework that allows users to quantify the impact of their changes. This enables fast iteration and impact certification of new features, empowering infra owners to ship new changes with high confidence.

- Defensively prevent regressions on TTFB: We need continuous regression prevention that tests the latest changes in a low-noise environment, while providing a way to monitor, detect, and prevent regressions from affecting ML engineers in the first place.

Introducing AI Lab

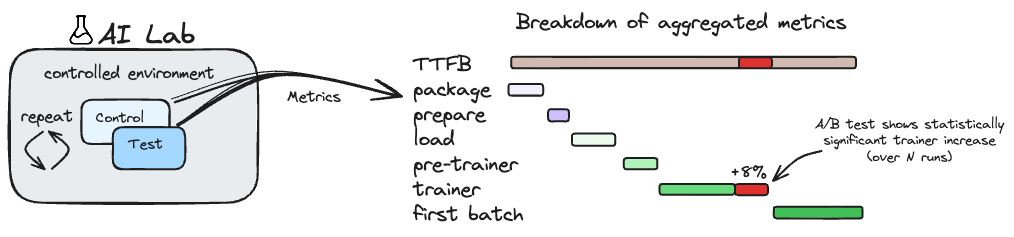

AI Lab is a specialized pre-production framework in which we continuously execute common ML workflows as an A/B test to accurately measure the impact of recent changes on metrics like TTFB. Built on top of the same systems as MobileLab, AI Lab automatically defends TTFB by preventing regressions prior to release and enables offensive TTFB improvements opportunistically as an experimentation framework.

Building AI Lab presented unique challenges. Because GPU capacity is such a precious resource, we had to ensure we were a net positive to capacity usage across Meta. We took care to work with partners on shrunk models and simple configurations, including some that could run on CPUs only, while still preventing the regressions that would regularly tie up GPUs. To this end, we created an auto-shrinker that aims to ensure tests run the same code and configurations as production, except that they consume fewer compute resources. It does things like reduce the number of training iterations and the model size, and it even enables more deterministic behavior. These tests often run in under 10 minutes, which is beneficial for developers iterating on potential TTFB changes. We also needed a holistic strategy to scale to the size of Meta, something we’ll cover in a later section.
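
To make the auto-shrinker idea concrete, here is a minimal sketch of what such a transformation could look like. It is not AI Lab’s implementation: the `TrainingConfig` fields, the `shrink` function, and its default caps are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class TrainingConfig:
    # Hypothetical fields; the article does not describe real workflow configs.
    num_iterations: int
    hidden_size: int
    num_layers: int
    use_gpu: bool
    seed: Optional[int] = None


def shrink(
    config: TrainingConfig,
    max_iterations: int = 10,
    max_hidden_size: int = 64,
    max_layers: int = 2,
    seed: int = 0,
) -> TrainingConfig:
    """Return a cheaper copy of `config` with the same shape of settings.

    Only sizes are capped, so the shrunk job still exercises the same
    code path as the original (assumed) production configuration.
    """
    return replace(
        config,
        num_iterations=min(config.num_iterations, max_iterations),
        hidden_size=min(config.hidden_size, max_hidden_size),
        num_layers=min(config.num_layers, max_layers),
        use_gpu=False,  # assumption: the shrunk test targets CPU-only capacity
        # Pin a seed when none is set, for more deterministic runs.
        seed=config.seed if config.seed is not None else seed,
    )
```

Because the sketch returns a modified copy instead of mutating its input, the production configuration stays untouched and the test configuration can be regenerated whenever production changes.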

Let’s jump into a real example of how we can leverage a tool like AI Lab to reduce TTFB.

Reducing TTFB with the Python Cinder runtime and AI Lab

Meta’s open source Python Cinder runtime brought with it up to a 40% improvement in TTFB thanks to its aggressive lazy imports. Here, we see the true utility of a framework like AI Lab and how it was used to facilitate this sweeping change.

Offensively

We can leverage AI Lab instead of experimenting on real ML engineers’ workflows, which may require days or weeks of turnaround to validate a performance hypothesis. With AI Lab, in less than an hour, we’re able to accurately test and measure the impact of a proposed Cinder version on TTFB across a comprehensive set of representative ML scenarios.

In practice, developers turned this into an iteration loop to test further optimizations and fine-tune Cinder, yielding a 2x increase on the original TTFB improvements they were seeing. For example, in initial profiles with Cinder enabled, engineers found that up to 10% of the execution time was spent in a workflow that did nothing but pretty-print. It turned out that the memoization method in use triggered a repr() on an underlying data structure, which just so happened to be huge in typical ML scenarios. Instead, they built an object wrapper around this underlying data structure and made the memoization comparisons using object identities.
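
The difference between the two memoization strategies can be sketched as follows. This is an illustrative reconstruction, not the code that was changed: `IdentityKey`, `BigStructure`, and both decorators are hypothetical names, and the `repr_calls` counter exists only to make the cost visible.

```python
class IdentityKey:
    """Wrapper that hashes and compares an object by identity, not contents."""

    __slots__ = ("obj",)

    def __init__(self, obj):
        # Holding a reference keeps the object alive, so its id() cannot be
        # reused by another object while the key sits in the cache.
        self.obj = obj

    def __hash__(self):
        return id(self.obj)

    def __eq__(self, other):
        return isinstance(other, IdentityKey) and self.obj is other.obj


class BigStructure:
    """Stand-in for a large data structure with an expensive repr()."""

    repr_calls = 0

    def __init__(self, items):
        self.items = items

    def __repr__(self):
        BigStructure.repr_calls += 1
        return f"BigStructure({self.items!r})"


def memoize_by_repr(fn):
    cache = {}

    def wrapper(arg):
        key = repr(arg)  # cost grows with the size of arg on every call
        if key not in cache:
            cache[key] = fn(arg)
        return cache[key]

    return wrapper


def memoize_by_identity(fn):
    cache = {}

    def wrapper(arg):
        key = IdentityKey(arg)  # constant-time key, never touches the contents
        if key not in cache:
            cache[key] = fn(arg)
        return cache[key]

    return wrapper
```

The trade-off in this sketch is that identity-based keys treat two equal but distinct objects as different entries and will not notice in-place mutation, so the approach fits cases where the same object is passed repeatedly.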

AI Lab verified the improvement, enabling them to proceed with rolling out the change.

Defensively

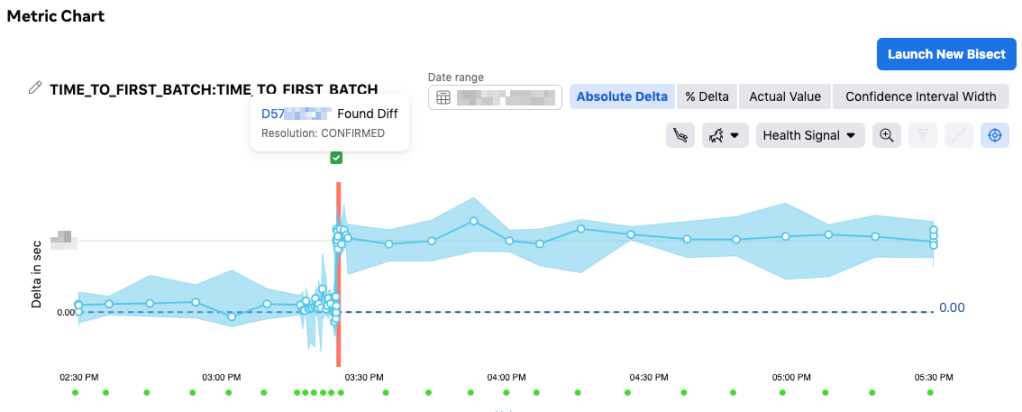

Around the time Cinder began rolling out, a regression happened to occur that was completely unrelated to the rollout. In this new regression, an engineer added some logging that they believed was being done asynchronously. Unbeknownst to them, the call was actually blocking because one of the nested clients it required was synchronous. AI Lab leveraged Incident Tracker and automatically attributed the regression to the specific change. The author of the change was notified shortly afterwards and reverted it before the release went out to production.
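
The general failure mode, a call that looks asynchronous but blocks because of a synchronous client underneath, can be reproduced in a few lines. The sketch below uses `asyncio` purely as an assumption; the article does not say which concurrency mechanism the real logging code used, and `SyncClient`, `log_blocking`, `log_offloaded`, and `first_batch` are hypothetical names.

```python
import asyncio
import time


class SyncClient:
    """Hypothetical nested client whose send() blocks the calling thread."""

    def send(self, message: str) -> None:
        time.sleep(0.05)  # stands in for a blocking network call


async def log_blocking(client: SyncClient, message: str, events: list) -> None:
    # Declared async, but the nested call blocks the whole event loop.
    client.send(message)
    events.append("log done")


async def log_offloaded(client: SyncClient, message: str, events: list) -> None:
    # Runs the blocking call in a worker thread so the loop stays free.
    await asyncio.to_thread(client.send, message)
    events.append("log done")


async def first_batch(events: list) -> None:
    await asyncio.sleep(0)  # yield once, then record progress
    events.append("first batch")


async def run(log_fn) -> list:
    """Run a logging call next to other work and return the event order."""
    events: list = []
    await asyncio.gather(log_fn(SyncClient(), "hello", events), first_batch(events))
    return events
```

Calling `asyncio.run(run(log_blocking))` records the log call finishing before the other task makes any progress, whereas `asyncio.run(run(log_offloaded))` lets the other task proceed while the log call is still in flight.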

Thanks to AI Lab, the engineers working on Cinder never had to worry about a TTFB regression occurring in the same release they rolled out in, avoiding a potential rollback.

How to achieve prevention at Meta’s scale

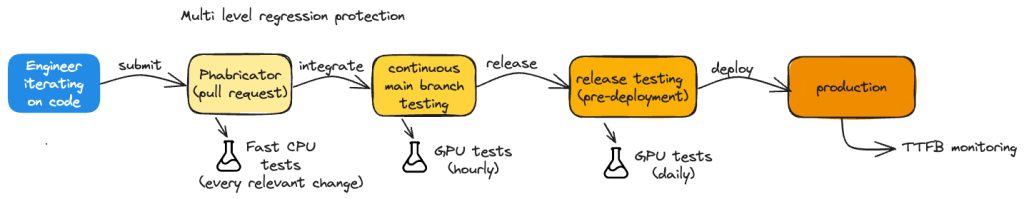

We want to give accurate TTFB signals as early as possible in the development cycle, but it’s infeasible to benchmark all ML scenarios for every change made by every engineer at Meta. Instead, similar to predictive test selection, we establish a limit on the capacity used and set out to find as many regressions/improvements as early in the development cycle as possible. In practice, this means:

- O(Code Changes): Running relevant, effective, and computationally efficient (often CPU-only) AI Lab tests on prospective changes before they’re even reviewed.

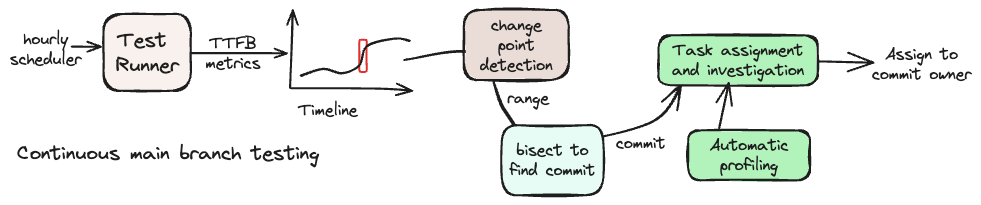

- O(Releases): Running a more holistic set of AI Lab tests prior to release and performing a bisect-like attribution process to find the root cause.

- Attribution in this manner is highly effective and efficient; it serves as a great fallback when we must run more computationally intensive tests to find a certain regression.
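
A bisect-like attribution step can be sketched as a binary search over the ordered changes in a release. This is a simplified illustration, not the Incident Tracker algorithm: `find_first_bad` and the `is_regressed` hook are hypothetical, and the sketch assumes a single culprit whose effect persists in every later build.

```python
from typing import Callable, Sequence


def find_first_bad(changes: Sequence[str], is_regressed: Callable[[int], bool]) -> str:
    """Return the first change after which the metric is regressed.

    `is_regressed(i)` is a hypothetical hook that runs the tests against a
    build containing changes[0..i] and reports whether TTFB regressed.
    Assumes the build with all changes is regressed and that, once the
    regression appears, every later build also shows it.
    """
    lo, hi = 0, len(changes) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if is_regressed(mid):
            hi = mid  # culprit is at mid or earlier
        else:
            lo = mid + 1  # culprit landed after mid
    return changes[lo]
```

With this approach the number of test runs grows with the logarithm of the number of changes in the release, which is what makes it practical for the more expensive tests.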

Should we find a statistically significant change per a t-test, we perform additional checks before marking it as a regression/improvement:

- Run confirmation runs to verify that we can confidently reproduce the expected regression/improvement.

- Ensure the size of the regression/improvement is above a dynamic threshold based on the standard deviation of the test and a tuned receiver operating characteristic. For example, a partner may require fewer than one false positive per week, which sets the threshold for our tests to find as many true positives as possible while staying below that limit.
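
The checks above can be sketched with the standard library alone. Several simplifications are assumptions of this sketch and not details from AI Lab: it compares Welch’s t statistic against a fixed critical value `t_critical` instead of computing a p-value, and it models the dynamic threshold as a multiplier `k` on the control group’s standard deviation, where `k` stands in for whatever value the tuned receiver operating characteristic would produce.

```python
import math
import statistics
from typing import Sequence, Tuple


def welch_t(control: Sequence[float], treatment: Sequence[float]) -> float:
    """Welch's t statistic; assumes at least one sample has nonzero variance."""
    standard_error = math.sqrt(
        statistics.variance(control) / len(control)
        + statistics.variance(treatment) / len(treatment)
    )
    return (statistics.mean(treatment) - statistics.mean(control)) / standard_error


def classify(
    control: Sequence[float],
    treatment: Sequence[float],
    t_critical: float = 2.0,  # hypothetical significance cutoff
    k: float = 1.0,  # hypothetical multiplier, tuned from an ROC curve
) -> str:
    """Label one A/B run as 'regression', 'improvement', or 'no change'."""
    delta = statistics.mean(treatment) - statistics.mean(control)
    if abs(welch_t(control, treatment)) < t_critical:
        return "no change"  # not statistically significant
    if abs(delta) < k * statistics.stdev(control):
        return "no change"  # significant, but below the size threshold
    return "regression" if delta > 0 else "improvement"  # higher TTFB is worse


def confirm(
    runs: Sequence[Tuple[Sequence[float], Sequence[float]]],
    t_critical: float = 2.0,
    k: float = 1.0,
) -> str:
    """Report a verdict only if every confirmation run agrees on it."""
    verdicts = {classify(c, t, t_critical, k) for c, t in runs}
    return verdicts.pop() if len(verdicts) == 1 else "no change"
```

Raising `k` trades missed detections for fewer false positives, which is the knob a false-positive budget such as the one in the example would set.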

Inviting industry collaboration

While AI Lab is an internal-only tool at Meta, we’d love to hear from members of the community who may be running similar platforms. Synthetic signal production is a boon to both developers and users. When developers can rapidly evaluate a hypothesis, and users experience fewer regressions, it speeds up AI innovation across the industry. We’d love to collaborate with the industry to explore more ways we can improve on tools like AI Lab and optimize more metrics like TTFB.

Acknowledgements

AI Lab was made possible by the foundational work of MobileLab. As we aim to scale past TTFB, we look forward to tackling AI efficiency metrics too with ServiceLab. We’d like to thank members of the AI Training Orchestration team for helping us build AI Lab, and all of our users for leveraging the product to keep improving TTFB.