Meta’s advertising business leverages large-scale machine learning (ML) recommendation models that power millions of ads recommendations per second across Meta’s family of apps. Maintaining the reliability of these ML systems helps ensure the highest level of service and uninterrupted benefit delivery to our users and advertisers. To minimize disruptions and ensure our ML systems are intrinsically resilient, we have built a comprehensive set of prediction robustness solutions that ensure stability without compromising the performance or availability of our ML systems.

Why is machine learning robustness difficult?

Solving for ML prediction stability has many unique characteristics, making it more complex than addressing stability challenges for traditional online services:

- ML models are stochastic by nature. Prediction uncertainty is inherent, which makes it difficult to define, identify, diagnose, reproduce, and debug prediction quality issues.

- Constant and frequent refreshing of models and features. ML models and features are continuously updated to learn from and reflect people’s interests, which makes it challenging to locate prediction quality issues, contain their impact, and quickly resolve them.

- Blurred line between reliability and performance. In traditional online services, reliability issues are easier to detect based on service metrics such as latency and availability. However, an ML prediction stability issue shows up as a consistent shift in prediction quality, which is harder to distinguish. For example, an “available” ML recommender system that reliably produces inaccurate predictions is actually “unreliable.”

- Cumulative effect of small distribution shifts over time. Due to the stochastic nature of ML models, small regressions in prediction quality are hard to distinguish from the expected organic traffic-pattern changes. However, if undetected, such small prediction regressions could have a significant cumulative negative impact over time.

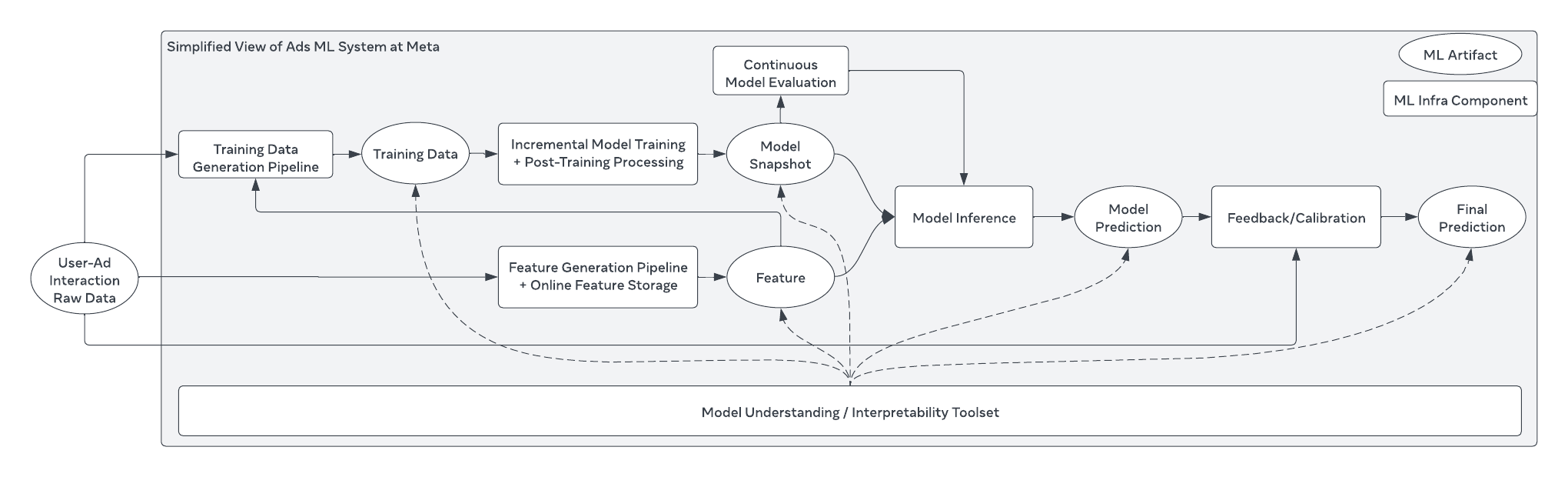

- Long chain of complex interactions. The final ML prediction result is derived from a complex chain of processing and propagation across multiple ML systems. A regression in prediction quality could be traced back to several hops upstream in the chain, making it hard to diagnose issues and locate stability improvements for a specific ML system.

- Small fluctuations can amplify to become big impacts. Even small changes in the inputs (e.g., features, training data, and model hyperparameters) can have a significant and unpredictable impact on the final predictions. This poses a major challenge in containing prediction quality issues at particular ML artifacts (model, feature, label), and it requires end-to-end global protection.

- Rising complexity with rapid modeling innovations. Meta’s ML technologies are evolving quickly, with increasingly larger and more complex models and new system architectures. This requires prediction robustness solutions to evolve at the same fast pace.
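To make the point about cumulative small shifts concrete, here is a minimal sketch of a one-sided CUSUM detector, a standard change-detection technique. It is an illustration only and is not described in this post as something Meta uses; the function name, the metric, and all parameter values are assumptions.

```python
def cusum_alarm(values, target, slack, threshold):
    """Return the index at which the one-sided CUSUM statistic first
    exceeds `threshold`, or None if it never does.

    values    -- a quality metric per time step where higher is worse
                 (hypothetical, e.g. a daily loss value)
    target    -- the expected level of the metric
    slack     -- deviation that is tolerated as ordinary noise
    threshold -- accumulated excess deviation that triggers an alarm
    """
    s = 0.0
    for i, v in enumerate(values):
        # Accumulate only the deviation beyond the slack; never go below zero.
        s = max(0.0, s + (v - target - slack))
        if s > threshold:
            return i
    return None
```

For example, with ten steps at 0.80 followed by forty steps at 0.805, `cusum_alarm(series, target=0.80, slack=0.002, threshold=0.02)` returns 16. Each step is only 0.005 above target, so a per-step check against a limit such as 0.01 would never fire, while the accumulated statistic does.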

Meta’s approach and progress towards prediction robustness

Meta has developed a systematic framework to build prediction robustness. This framework includes a set of prevention guardrails to build control from the outside in, a fundamental understanding of the issues to gain ML insights, and a set of technical fortifications to establish intrinsic robustness.

These three approaches are exercised across models, features, training data, calibration, and interpretability to ensure all possible issues are covered throughout the ML ecosystem. With prediction robustness, Meta’s ML systems are robust by design, and any stability issues are actively monitored and resolved to ensure smooth ads delivery for our users and advertisers.

Our prediction robustness solution systematically covers all areas of the recommender system: training data, features, models, calibration, and interpretability.

Model robustness

Model robustness challenges include model snapshot quality, model snapshot freshness, and inference availability. We use Snapshot Validator, an internal-only, real-time, scalable, and low-latency model evaluation system, as the prevention guardrail on the quality of every single model snapshot before it ever serves production traffic.

Snapshot Validator runs evaluations with holdout datasets on newly published model snapshots in real time, and it determines whether the new snapshot can serve production traffic. Snapshot Validator has reduced model snapshot corruption by 74% in the past two years. It has protected >90% of Meta ads ranking models in production without prolonging Meta’s real-time model refresh.
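Snapshot Validator is internal and its design is not described here, so the following is only a sketch of the general idea of gating a snapshot on a holdout evaluation. It assumes the gate compares normalized entropy (log loss divided by the entropy of the holdout's base rate) between the candidate and the currently serving snapshot; the function names and the 1% tolerance are hypothetical.

```python
import math

def normalized_entropy(labels, preds, eps=1e-12):
    """Average log loss divided by the entropy of the empirical base rate.
    Assumes binary labels and a holdout set containing both classes."""
    n = len(labels)
    log_loss = -sum(
        y * math.log(max(p, eps)) + (1 - y) * math.log(max(1 - p, eps))
        for y, p in zip(labels, preds)
    ) / n
    base = sum(labels) / n
    base_entropy = -(base * math.log(base) + (1 - base) * math.log(1 - base))
    return log_loss / base_entropy

def snapshot_passes(labels, candidate_preds, serving_preds, max_regression=0.01):
    """Hypothetical gate: reject a candidate whose holdout normalized entropy
    is non-finite or more than `max_regression` (relative) worse than the
    serving snapshot's."""
    candidate = normalized_entropy(labels, candidate_preds)
    if not math.isfinite(candidate):
        return False  # e.g. a corrupted snapshot emitting NaN predictions
    serving = normalized_entropy(labels, serving_preds)
    return candidate <= serving * (1 + max_regression)
```

A model that always predicts the base rate scores exactly 1.0 on this metric, which makes it a convenient reference point: values well above 1.0 indicate a snapshot that is worse than having no model at all.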

In addition, Meta engineers built new ML techniques to improve the intrinsic robustness of models, such as pruning less-useful modules inside models, better model generalization against overfitting, more effective quantization algorithms, and ensuring that model performance stays resilient even with a small amount of input data anomalies. Together these techniques have improved ads ML model stability, making the models resilient against overfitting, loss divergence, and more.

Feature robustness

Feature robustness focuses on guaranteeing the quality of ML features across coverage, data distribution, freshness, and training-inference consistency. As prevention guardrails, robust feature monitoring systems have been in production to continuously detect anomalies in ML features. Because ML feature value distributions can change widely, with non-deterministic effects on model performance, the anomaly detection systems have been tuned to the particular traffic and ML prediction patterns to stay accurate.

Upon detection, automated preventive measures kick in to ensure abnormal features are not used in production. Additionally, a real-time feature importance evaluation system has been built to provide a fundamental understanding of the correlation between feature quality and model prediction quality.

All these solutions have effectively contained ML feature issues at Meta, including coverage drops, data corruption, and inconsistency.
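As an illustration of what a coverage and distribution check on a feature might look like, here is a minimal sketch. It is not Meta's monitoring system: it assumes missing values are represented as `None`, uses the population stability index (PSI) as the drift measure, and the thresholds (95% coverage, PSI of 0.2, a commonly cited rule of thumb) are placeholders.

```python
import math

def coverage(values):
    """Fraction of rows in which the feature is present (not None)."""
    return sum(v is not None for v in values) / len(values)

def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two non-empty samples, bucketed
    by `edges` (sorted ascending)."""
    def bucket_fractions(xs):
        counts = [0] * (len(edges) + 1)
        for x in xs:
            counts[sum(x >= e for e in edges)] += 1
        # Floor each fraction at eps so empty buckets do not produce log(0).
        return [max(c / len(xs), eps) for c in counts]

    e = bucket_fractions(expected)
    a = bucket_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

def feature_is_healthy(baseline, live, edges, min_coverage=0.95, max_psi=0.2):
    """Hypothetical guardrail: the feature must be present often enough and
    its live distribution must stay close to the baseline distribution."""
    present = [v for v in live if v is not None]
    if not present or coverage(live) < min_coverage:
        return False
    base_present = [v for v in baseline if v is not None]
    return psi(base_present, present, edges) <= max_psi
```

A caller could use the result to decide whether to fall back to a default value or exclude the feature from serving; that policy is outside the scope of this sketch.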

Training data robustness

The wide spectrum of Meta ads products requires distinct labeling logic for model training, which significantly increases the complexity of labeling. In addition, the data sources for label calculation could be unstable due to the complicated logging infrastructure and organic traffic drifts. Dedicated training-data-quality systems were built as prevention guardrails to detect label drifts over time with high accuracy, swiftly and automatically mitigate abnormal data changes, and prevent models from learning from the affected training data.

Additionally, a fundamental understanding of training data label consistency has resulted in optimizations in training data generation for better model learning.
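One simple way to picture label drift detection is to compare the positive-label rate of an incoming training batch against a trusted baseline. The sketch below uses a two-proportion z-test for that comparison; it is an assumption made for illustration, not the method used by Meta's training-data-quality systems, and the function names and the `z_limit` value are hypothetical.

```python
import math

def label_rate_z(base_pos, base_n, cur_pos, cur_n):
    """Two-proportion z-score for the change in positive-label rate between
    a baseline window and the current batch."""
    p_base, p_cur = base_pos / base_n, cur_pos / cur_n
    pooled = (base_pos + cur_pos) / (base_n + cur_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / base_n + 1 / cur_n))
    # se is zero only when both rates are identical (all 0s or all 1s).
    return (p_cur - p_base) / se if se > 0 else 0.0

def should_hold_batch(base_pos, base_n, cur_pos, cur_n, z_limit=4.0):
    """Hypothetical mitigation hook: keep the batch out of training when its
    label rate deviates too far from the baseline."""
    return abs(label_rate_z(base_pos, base_n, cur_pos, cur_n)) > z_limit
```

For instance, with a baseline of 2,000 positives in 100,000 examples, a batch with 200 positives in 10,000 is accepted, while a batch with 50 positives in 10,000 (a possible sign of lost conversion logging) is held.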

Calibration robustness

Calibration robustness builds real-time monitoring and auto-mitigation toolsets to guarantee that the final prediction is well calibrated, which is vital for advertiser experiences. The calibration mechanism is technically unique because it is trained in real time on unjoined data, which makes it more sensitive to traffic distribution shifts than the joined-data mechanism.

To improve the stability and accuracy of calibration, Meta has built prevention guardrails that consist of high-precision alert systems to minimize problem-detection time, as well as high-rigor, automatically orchestrated mitigations to minimize problem-mitigation time.
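A common way to quantify calibration is the ratio of the sum of predicted probabilities to the number of observed positive outcomes, where 1.0 means perfectly calibrated on average. The sketch below monitors that ratio over a sliding window. It is an illustration under stated assumptions rather than Meta's alerting system: the class name, window size, and tolerance are hypothetical, and for simplicity it consumes paired (prediction, label) events.

```python
from collections import deque

class CalibrationMonitor:
    """Sliding-window monitor for the ratio sum(predictions) / sum(labels)."""

    def __init__(self, window, tolerance=0.05):
        self.events = deque(maxlen=window)  # oldest events fall out automatically
        self.tolerance = tolerance

    def observe(self, prediction, label):
        self.events.append((prediction, label))

    def ratio(self):
        positives = sum(label for _, label in self.events)
        if positives == 0:
            return None  # ratio is undefined until a positive label is seen
        return sum(p for p, _ in self.events) / positives

    def is_miscalibrated(self):
        r = self.ratio()
        return r is not None and abs(r - 1.0) > self.tolerance
```

A ratio above 1.0 means the model is over-predicting on recent traffic and a ratio below 1.0 means it is under-predicting; an alerting layer could page or trigger a mitigation when `is_miscalibrated()` stays true for several consecutive windows.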

ML interpretability

ML interpretability focuses on identifying the root causes of all ML instability issues. Hawkeye, our internal AI debugging toolkit, allows engineers at Meta to root-cause tricky ML prediction problems. Hawkeye is an end-to-end, streamlined diagnostic experience covering all ML artifacts at Meta, and it has covered >80% of ads ML artifacts. It is now one of the most widely used tools in the Meta ML engineering community.

Beyond debugging, ML interpretability invests heavily in understanding model internal states, one of the most complex and technically challenging areas in the realm of ML stability. There are no standardized solutions to this challenge, but Meta uses model graph tracing, which draws on model internal states such as model activations and neuron importance, to accurately explain why models get corrupted.

Altogether, advancements in ML interpretability have reduced the time to root-cause ML prediction issues by 50%, and have significantly boosted the fundamental understanding of model behaviors.

Improving ranking and productivity with prediction robustness

Going forward, we’ll be extending our prediction robustness solutions to improve ML ranking performance and to boost engineering productivity by accelerating ML development.

Prediction robustness techniques can boost ML performance by making models more robust intrinsically, with more stable training, fewer normalized entropy explosions or loss divergences, more resilience to data shift, and stronger generalizability. We’ve seen performance gains from applying robustness techniques like gradient clipping and more robust quantization algorithms. We will continue to identify more systematic improvement opportunities with model understanding techniques.
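Gradient clipping, one of the techniques named above, limits the size of an update so that a single bad batch cannot blow up the loss. Below is a minimal sketch of clipping by global L2 norm over a flat list of gradient values. It shows the general technique only; the post does not say which clipping variant or threshold Meta uses, and the function name is hypothetical.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale `grads` so that their combined L2 norm is at most `max_norm`.
    Gradients already within the limit are returned unchanged (as a copy)."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)
    scale = max_norm / total_norm
    # Every component shrinks by the same factor, so the direction is preserved.
    return [g * scale for g in grads]
```

For example, clipping `[3.0, 4.0]` (norm 5) to a maximum norm of 1.0 yields approximately `[0.6, 0.8]`, while `[0.3, 0.4]` passes through untouched.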

In addition, model performance will be improved with less staleness and stronger consistency between serving and training environments across labels, features, the inference platform, and more. We plan to continue upgrading Meta’s ads ML services with stronger guarantees of training-serving consistency and more aggressive staleness SLAs.

Regarding ML development productivity, prediction robustness techniques can facilitate model development and improve daily operations by reducing the time needed to address ML prediction stability issues. We’re currently building an intelligent ML diagnostic platform that will leverage the latest ML technologies, in the context of prediction robustness, to help even engineers with little ML knowledge locate the root cause of ML stability issues within minutes.

The platform will also evaluate reliability risk continuously across the development lifecycle, minimizing delays in ML development due to reliability regressions. It will embed reliability into every ML development stage, from idea exploration all the way to online experimentation and final launches.

Acknowledgements

We would like to thank all the team members and the leadership who contributed to making the Prediction Robustness effort successful at Meta. Special thanks to Adwait Tumbde, Alex Gong, Animesh Dalakoti, Ashish Singh, Ashish Srivastava, Ben Dummitt, Booker Gong, David Serfass, David Thompson, Evan Poon, Girish Vaitheeswaran, Govind Kabra, Haibo Lin, Haoyan Yuan, Igor Lytvynenko, Jie Zheng, Jin Zhu, Jing Chen, Junye Wang, Kapil Gupta, Kestutis Patiejunas, Konark Gill, Lachlan Hillman, Lanlan Liu, Lu Zheng, Maggie Ma, Marios Kokkodis, Namit Gupta, Ngoc Lan Nguyen, Partha Kanuparthy, Pedro Perez de Tejada, Pratibha Udmalpet, Qiming Guo, Ram Vishnampet, Roopa Iyer, Rohit Iyer, Sam Elshamy, Sagar Chordia, Sheng Luo, Shuo Chang, Shupin Mao, Subash Sundaresan, Velavan Trichy, Weifeng Cui, Ximing Chen, Xin Zhao, Yalan Xing, Yiye Lin, Yongjun Xie, Yubin He, Yue Wang, Zewei Jiang, Santanu Kolay, Prabhakar Goyal, Neeraj Bhatia, Sandeep Pandey, Uladzimir Pashkevich, and Matt Steiner.