- How did the Threads iOS team maintain the app's performance during its incredible growth?

- Here's how Meta's Threads team thinks about performance, including the key metrics we monitor to keep the app healthy.

- We're also diving into some case studies that impacted post reliability and navigation latency.

When Meta launched Threads in 2023, it became the fastest-growing app in history, gaining 100 million users in only five days. The app has now grown to more than 300 million monthly users worldwide, and its development team has expanded from a small group of scrappy engineers to an organization with more than 100 contributors.

Looking back on where the Threads iOS app was a year ago, so much has changed: We've expanded into Europe, integrated with the Fediverse, launched a public API, developed many new ways for people to share what's happening in their world, and introduced new methods to find and read the best content being produced. We even celebrated our first birthday with party hats and scratch-off app icons!

To make sure the app is easy and delightful to use, and to scale with a quickly growing user base and development team, it needs to be performant. Here's how we think about performance in the Threads iOS app, what we've learned in our first year, and how we've tackled a few of our biggest performance challenges.

How Threads measures performance at scale

Having a fast and performant app is critical to providing the best user experience. We want Threads to be the best place for live, creative commentary about what's happening now; that means Threads also needs to be the fastest and most responsive app in its class. If the app doesn't feel lightning fast, or if it hangs or drains a phone's battery, no one will want to use it. Our features need to work reliably and fail infrequently no matter what kind of phone someone is using, how much memory their phone has, or whether they're using Threads somewhere with robust cellular coverage or on a network that keeps dropping out.

Some performance issues are encountered only rarely but can still be frustrating. As the iOS app's usage grew rapidly during our first year after launch, we wanted to learn what the biggest pain points were for most people, as well as the extreme performance issues experienced by a small percentage of users. We measured how quickly the app launches, how long it takes to post a photo or video, how often the app crashed, and how many bug reports people filed.

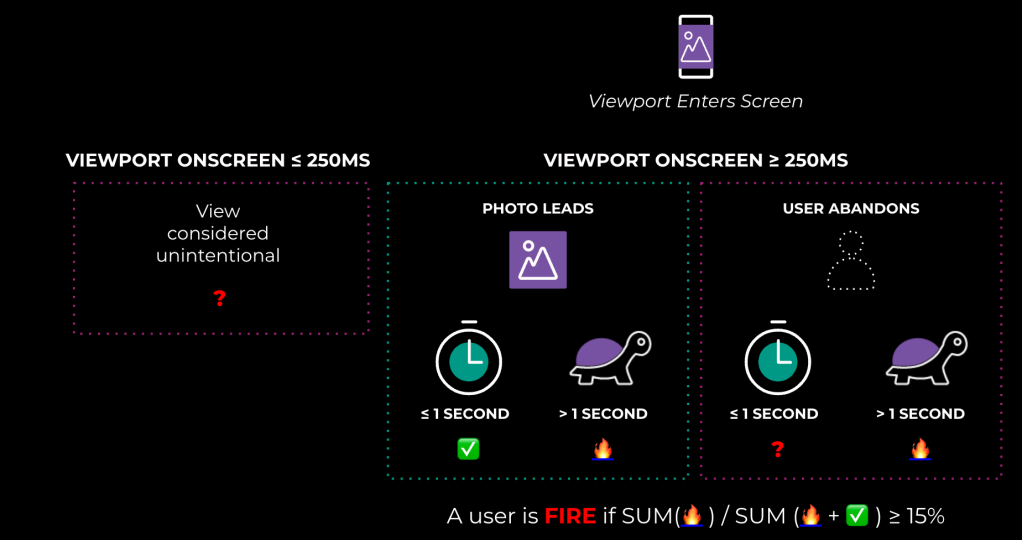

%FIRE: Frustrating image-render experience

In addition to all the text updates people share, a lot of photos are shared on Threads. When images load slowly or not at all, that can cause someone to stop using the app. That's why we monitor an important metric that alerts us when there's a regression in how images load for our users. That metric, %FIRE, is the percentage of people who have a frustrating image-render experience, and it's calculated as shown in Figure 1.
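Figure 1 holds the actual formula, which isn't reproduced in the text. Purely as an illustration, the sketch below computes a per-user percentage under an assumed definition: a user counts as frustrated if any of their image renders failed or took longer than a threshold. The `ImageRenderEvent` type and the 2-second threshold are hypothetical, not Threads' real definition.

```swift
import Foundation

/// One image-render attempt as a client might log it (hypothetical schema).
struct ImageRenderEvent {
    let userID: String
    /// Seconds until the image appeared, or nil if it never rendered.
    let renderTime: TimeInterval?
}

/// Assumed definition of a frustrating render: it failed, or took longer than `threshold`.
func isFrustrating(_ event: ImageRenderEvent, threshold: TimeInterval) -> Bool {
    guard let time = event.renderTime else { return true }
    return time > threshold
}

/// Percentage of distinct users who had at least one frustrating render.
func percentFIRE(_ events: [ImageRenderEvent], threshold: TimeInterval = 2.0) -> Double {
    let allUsers = Set(events.map { $0.userID })
    guard !allUsers.isEmpty else { return 0 }
    let frustratedUsers = Set(events.filter { isFrustrating($0, threshold: threshold) }.map { $0.userID })
    return 100.0 * Double(frustratedUsers.count) / Double(allUsers.count)
}
```

Counting distinct users rather than render events keeps one person with a bad connection from dominating the metric, which matches the "percentage of people" wording above.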

All kinds of things can regress %FIRE, on both the client and the backend, but not all image-rendering bugs are covered by this metric. For example, in Threads iOS, we had a bug earlier this year where user profile photos would flicker because of how we were comparing view models when reusing them. That caused a frustrating user experience, but not one that would show up in %FIRE.
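The post doesn't describe the exact root cause of the flicker, so the following is only a sketch of one common way this class of bug arises: an equality check on a reused view model includes a value that changes on every refresh, so the cell believes its avatar changed and clears and reloads the image. `AvatarViewModel` and its `renderToken` field are hypothetical.

```swift
import Foundation

/// Hypothetical view model for a profile photo in a reusable cell.
struct AvatarViewModel {
    let userID: String
    let imageURL: URL
    /// Regenerated on every feed refresh (illustrative source of the bug).
    let renderToken: UUID
}

/// Buggy comparison: the token differs after every refresh, so this always
/// reports a change and the cell reloads its image, which shows up as a flicker.
func needsReloadBuggy(old: AvatarViewModel?, new: AvatarViewModel) -> Bool {
    guard let old = old else { return true }
    return !(old.userID == new.userID && old.imageURL == new.imageURL && old.renderToken == new.renderToken)
}

/// Fixed comparison: only the field that changes what is drawn triggers a reload.
func needsReload(old: AvatarViewModel?, new: AvatarViewModel) -> Bool {
    guard let old = old else { return true }
    return old.imageURL != new.imageURL
}
```

Because the image eventually loads either way, a bug like this wouldn't register as a failed or slow render, which is why it can slip past an image-render metric.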

Time-to-network content (TTNC)

How fast the app starts and how fast we deliver a user's feed to them is also important. We know that if someone has to stare at an app launch screen, activity spinner, or loading shimmer for too long, they'll just close the app. All of this is measured by something we call TTNC, or time-to-network content. In addition to having the app start fast, people also want us to show them what's happening now, so TTNC measures how fast we're able to load a fresh, personalized feed, not just cached, locally stored posts.
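The key detail is that rendering cached posts does not stop the clock. A minimal sketch of that rule, assuming a hypothetical `TTNCTimer` with injected timestamps (a real app would read a monotonic clock):

```swift
import Foundation

/// Where rendered feed content came from.
enum ContentSource {
    case cache
    case network
}

/// Hypothetical time-to-network-content timer.
struct TTNCTimer {
    private let start: TimeInterval
    private(set) var result: TimeInterval?

    init(launchTime: TimeInterval) {
        start = launchTime
    }

    /// Called whenever feed content is rendered. Cached renders are ignored,
    /// and only the first network render is recorded.
    mutating func didRender(from source: ContentSource, at time: TimeInterval) {
        guard result == nil, source == .network else { return }
        result = time - start
    }
}
```

Showing cached posts first still helps the app feel responsive; it just doesn't count toward TTNC.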

The Threads iOS team has also improved app launch time by keeping the app's binary size small. Every time someone tries to commit code to Threads, they're alerted if that change would increase the app's binary size above a configured threshold. Code that violates our binary size policy isn't allowed to be merged.
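Such a gate boils down to a comparison run in CI. This sketch assumes the threshold applies to the growth introduced by a single change (it could equally be an absolute ceiling); `checkBinarySize` and the byte values are illustrative, not Meta's tooling.

```swift
import Foundation

/// Outcome of a hypothetical binary-size gate for one code change.
enum SizeCheckResult: Equatable {
    case pass
    case blocked(growthBytes: Int)
}

/// Blocks a change whose binary growth exceeds `maxGrowthBytes`.
/// Changes that shrink the binary or leave it unchanged always pass.
func checkBinarySize(baselineBytes: Int, candidateBytes: Int, maxGrowthBytes: Int) -> SizeCheckResult {
    let growth = candidateBytes - baselineBytes
    return growth > maxGrowthBytes ? .blocked(growthBytes: growth) : .pass
}
```

Reporting the growth in the blocked case gives the author a concrete number to trim.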

We're proactive, too: To help reduce TTNC, we've spent a lot of time since Threads launched removing unnecessary code and graphics assets from our app bundle, resulting in a binary one-quarter the size of Instagram's. It doesn't hurt that this also reduces our iOS app's build time, which makes the app more fun to develop! Threads compiles two times faster than Instagram for our non-incremental builds.

Creation-publish success rate (cPSR)

Where %FIRE and TTNC measure how content is presented to a user, we have one other important metric: cPSR, the creation-publish success rate. We measure this separately for text posts, photos, and videos published to Threads. When someone tries to post a photo or video, many things can prevent it from succeeding. Photos and videos are locally transcoded into the formats we want to upload, which happens asynchronously as part of the publishing process. Both use a lot more data and take longer than text to upload, so there's more time for something to go wrong. A user might background the app after tapping "Post" without waiting for it to succeed, which on iOS might give us only a few seconds to complete the upload before the operating system terminates the app.
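As an illustration, cPSR per post type can be read as successful publishes divided by publish attempts, bucketed by type. The attempt and outcome model below is an assumption; in particular, counting an upload that the OS cut short as a failed attempt is our choice for the sketch.

```swift
import Foundation

enum PostType: Hashable {
    case text, photo, video
}

enum PublishOutcome {
    case success
    case failure      // e.g., a network or transcoding error
    case terminated   // app killed while backgrounded mid-upload
}

struct PublishAttempt {
    let type: PostType
    let outcome: PublishOutcome
}

/// Creation-publish success rate per post type, as a fraction in 0...1.
func cPSR(_ attempts: [PublishAttempt]) -> [PostType: Double] {
    let grouped = Dictionary(grouping: attempts, by: { $0.type })
    return grouped.mapValues { group in
        let successes = group.filter { $0.outcome == .success }.count
        return Double(successes) / Double(group.count)
    }
}
```

Keeping the types separate matters because photo and video attempts fail for reasons (transcoding, large uploads, background termination) that text posts rarely hit.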

Later in this blog post, we'll go into some of the ways we're improving cPSR.

Deep dive: Navigation latency

Navigation latency is important to the user experience because it's tied to how fast the app starts and to everything the user does once the app has launched. When we measure navigation latency, we want to know how long it takes to finish rendering content after a user navigates to a part of the app. That could be after app start, either from launching Threads directly on your phone or from tapping a push notification from Threads, or simply from tapping a post in your Feed and navigating to the conversation view.

Early in 2024, the Threads Performance team knew we wanted to focus on a few key areas, but which ones? Data from Instagram suggested that navigation latency is important, but Threads is used differently than Instagram. Because Threads had been available to download for only six months at the time, we knew that to prioritize areas of improvement we would first have to spend some time learning.

Learning from a boundary test

We started by creating a boundary test to measure latency, focusing on a few key places that people visit when they launch Threads or use the app. A boundary test is one where we measure the extreme ends of a boundary to learn what the effect is. In our case, we introduced a slight amount of latency when a small percentage of our users navigated to a user profile, to the conversation view for a post, or to their activity feed.
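A boundary test like this needs each user to land in the same bucket on every launch. The sketch below assumes deterministic bucketing by hashing a user ID with FNV-1a and uses the per-surface delays listed in Table 1; the 1%-per-bucket exposure and all type names are hypothetical.

```swift
import Foundation

enum Surface: Hashable {
    case activity, conversation, profile
}

/// Stable 64-bit FNV-1a hash. Swift's built-in `Hasher` is randomly seeded per
/// process, so it can't keep a user in the same bucket across launches.
func fnv1a(_ string: String) -> UInt64 {
    var hash: UInt64 = 0xcbf2_9ce4_8422_2325
    for byte in string.utf8 {
        hash ^= UInt64(byte)
        hash = hash &* 0x0000_0100_0000_01b3
    }
    return hash
}

enum LatencyBoundaryTest {
    /// Injected delays in seconds, one dictionary per bucket (values from Table 1).
    static let buckets: [[Surface: TimeInterval]] = [
        [.activity: 0.12, .conversation: 0.29, .profile: 0.28],
        [.activity: 0.15, .conversation: 0.36, .profile: 0.35],
        [.activity: 0.19, .conversation: 0.54, .profile: 0.53],
    ]

    /// Hypothetical exposure: each bucket gets 100 of 10,000 hash slots (1% of users).
    static let slotsPerBucket: UInt64 = 100

    /// Bucket index for a user, or nil if the user is not enrolled in the test.
    static func bucket(for userID: String) -> Int? {
        let slot = fnv1a(userID) % 10_000
        let index = Int(slot / slotsPerBucket)
        return index < buckets.count ? index : nil
    }

    /// Delay to inject before rendering `surface`; zero for users outside the test.
    static func injectedDelay(for userID: String, surface: Surface) -> TimeInterval {
        guard let index = bucket(for: userID) else { return 0 }
        return buckets[index][surface] ?? 0
    }
}
```

Everyone outside the three buckets gets no added delay and serves as the comparison group.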

| Navigation type | Latency injection | Daily Active Users | Foreground sessions | Likes | Conversation views |
|---|---|---|---|---|---|
| In-app navigation | Activity: 0.12s, Conversation: 0.29s, Profile: 0.28s | | | | |
| | Activity: 0.15s, Conversation: 0.36s, Profile: 0.35s | -0.68% | | | |
| | Activity: 0.19s, Conversation: 0.54s, Profile: 0.53s | -0.54% | -0.81% | | |
| App launch | Activity: 0.12s, Conversation: 0.29s, Profile: 0.28s | -0.37% | -0.67% | -1.63% | |
| | Activity: 0.15s, Conversation: 0.36s, Profile: 0.35s | -0.67% | -2.55% | | |
| | Activity: 0.19s, Conversation: 0.54s, Profile: 0.53s | -0.52% | -0.65% | | |

Table 1: Navigation latency boundary test results.

This injected latency would allow us to extrapolate what the effect would be if we improved how we delivered content to those views by a similar amount.

We already had robust analytics logging, but we couldn't differentiate between navigation to these views from a cold app launch and navigation from within the app. After adding that, we injected latency into three buckets, each with slight variability depending on the surface.
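Separating cold-launch navigations from in-app ones means each logged event has to carry its origin. A hedged sketch, with hypothetical type names, of tagging events and aggregating latency per (surface, origin) pair:

```swift
import Foundation

enum NavigationOrigin: Hashable {
    case coldLaunch   // app launched directly or from a push notification
    case inApp        // user navigated from somewhere already inside the app
}

struct NavigationEvent {
    let surface: String   // e.g., "profile", "conversation", "activity"
    let origin: NavigationOrigin
    let latency: TimeInterval
}

struct SurfaceKey: Hashable {
    let surface: String
    let origin: NavigationOrigin
}

/// Mean latency for each (surface, origin) pair, so cold-launch and in-app
/// navigations to the same view are analyzed separately.
func meanLatency(_ events: [NavigationEvent]) -> [SurfaceKey: TimeInterval] {
    let grouped = Dictionary(grouping: events) { SurfaceKey(surface: $0.surface, origin: $0.origin) }
    return grouped.mapValues { group in
        group.map { $0.latency }.reduce(0, +) / Double(group.count)
    }
}
```

A mean keeps the sketch short; in practice percentiles are usually more informative for latency.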

We learned that iOS users don't tolerate a lot of latency. The more we added, the less often they would launch the app and the less time they would stay in it. With the smallest latency injection, the impact was small or negligible for some views, but the largest injections had negative effects across the board. People would read fewer posts, post less often themselves, and in general interact less with the app. Remember, we weren't injecting latency into the core feed, either; only into the profile, permalink, and activity views.

Measuring navigation latency with SLATE

Navigation latency is difficult to measure consistently. If you have a big app that does many different things, you need a consistent way of "starting" your timer, measuring time to render a view across many different surfaces with different types of content and behavior, and finally "stopping" your timer. You also need to be aware of error states and empty views, which must be treated as terminal states. There can be many permutations and custom implementations across all of an app's surfaces.

To solve this problem and measure navigation latency consistently, we developed a new tool we call SLATE: the "Systemic LATEncy" logger. It gives us the ability to observe the events that trigger a new navigation, when the user interface (UI) is being built, when activity spinners or shimmers are displayed, when content from the network is displayed, and when a user sees an error condition. It's implemented using a set of common components that are the foundation for a lot of our UI, together with a system that measures performance by setting "markers" in code for specific events. Typically these markers are created by hand with a specific purpose in mind. The great thing about SLATE is that it automatically creates these markers for a developer, as long as they're using the common components. This makes the system highly scalable and maintainable in a very large code base such as Threads or Instagram.
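SLATE's internals aren't described beyond this, so the following is only a sketch of the general pattern: a shared base component records a marker every time it changes loading state, and the first terminal state (content, empty, or error) stops the timer. Every screen built on the component gets navigation timing without writing its own markers. All names here (`ScreenState`, `LatencyMarker`, `SlateLikeScreen`) are hypothetical.

```swift
import Foundation

/// Lifecycle states a shared screen component can move through.
enum ScreenState: Equatable {
    case building
    case loading   // spinner or shimmer visible
    case content   // content from the network is displayed
    case empty     // terminal: nothing to show
    case error     // terminal: user sees an error condition

    var isTerminal: Bool {
        switch self {
        case .content, .empty, .error: return true
        case .building, .loading: return false
        }
    }
}

struct LatencyMarker: Equatable {
    let state: ScreenState
    let elapsed: TimeInterval   // seconds since the navigation started
}

/// Hypothetical shared base component. Feature code just reports state changes;
/// markers are recorded automatically.
final class SlateLikeScreen {
    let name: String
    private let navigationStart: TimeInterval
    private(set) var markers: [LatencyMarker] = []

    init(name: String, navigationStart: TimeInterval) {
        self.name = name
        self.navigationStart = navigationStart
    }

    /// Navigation latency, available once a terminal state has been reached.
    var navigationLatency: TimeInterval? {
        markers.first(where: { $0.state.isTerminal })?.elapsed
    }

    func transition(to state: ScreenState, at time: TimeInterval) {
        // Ignore everything after the first terminal state so the timer stops once.
        guard navigationLatency == nil else { return }
        markers.append(LatencyMarker(state: state, elapsed: time - navigationStart))
    }
}
```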

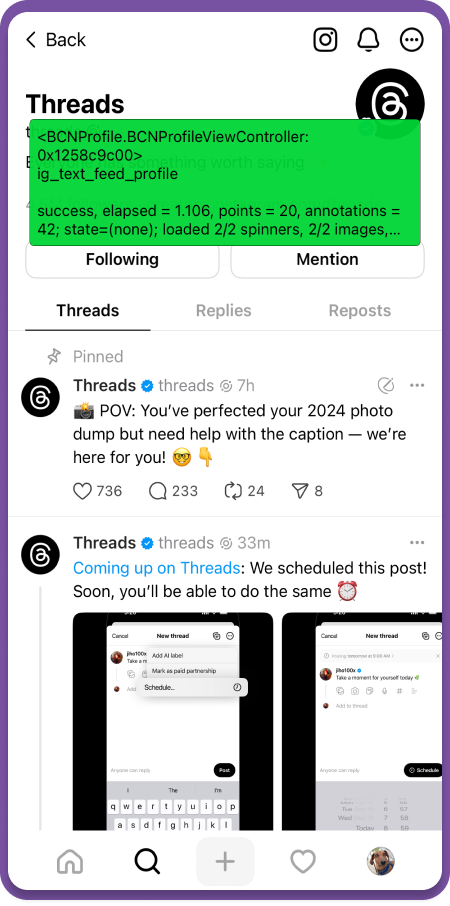

When our iOS developers are creating a new feature, it's easy to see whether it affects navigation latency. Anyone can enable the SLATE debugger (depicted in Image 1) right in the internal build of our app, and it's easy to create a dashboard so they can get a report about how their code is performing in production.

Case study: Using SLATE to validate GraphQL adoption

Over the last year, both Instagram and Threads have been adopting GraphQL for network requests. Even though Meta created GraphQL back in 2012, we built Instagram on a network stack based on REST, so Threads for iOS and Android originally inherited that technical legacy.

When Threads for Web was developed, it was a fresh code base built on the modern GraphQL standard instead of REST. While this was great for the web, it meant that new features delivered to both web and iOS/Android had to be written twice: once to support the GraphQL endpoints and once for REST. We wanted to move new development to GraphQL, but because the implementation was unproven for Threads, we first needed to measure it and make sure it was ready to be adopted. We expected GraphQL to reduce the amount of data moved over the network, but the infrastructure needed to parse and store that data might introduce additional latency.

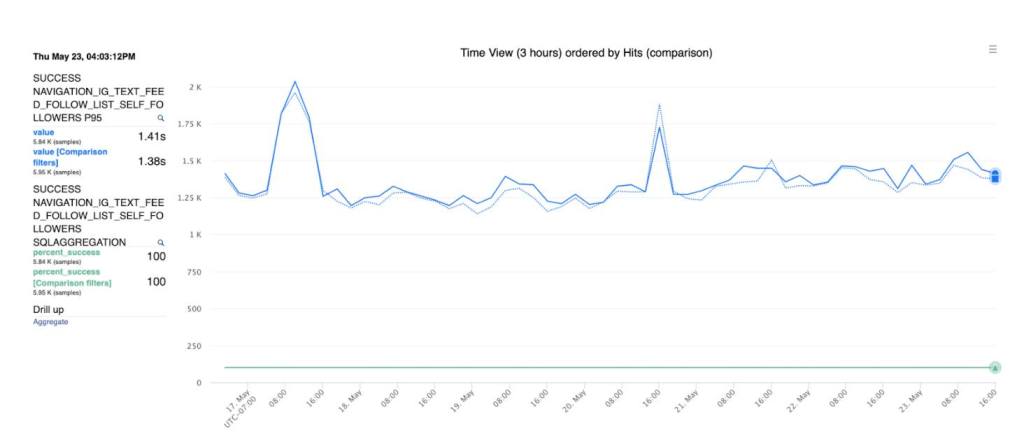

We decided to run a test where we took one of our views and implemented its network delivery code using GraphQL. Then we could run the REST and GraphQL implementations side by side and compare the results. We chose the "user list" views that power the Followers and Following lists, and set out to determine whether the new code that delivered and parsed GraphQL responses was at least as fast as the legacy REST code.

This was easy to do using Swift. We created an abstraction that extracted the existing API into a protocol that both the REST and GraphQL code could conform to; then, whenever the code was called, a factory method generated the appropriate provider.
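A sketch of that pattern, with hypothetical names (`UserListProvider`, `RESTUserListProvider`, `GraphQLUserListProvider`, `makeUserListProvider`). The network calls are stubbed so the structure stays visible and runnable; the real providers would call their respective endpoints.

```swift
import Foundation

struct UserSummary: Equatable {
    let id: String
    let username: String
}

enum UserListKind {
    case followers, following
}

/// The existing API, extracted into a protocol that both network stacks satisfy.
protocol UserListProvider: Sendable {
    var backendName: String { get }
    func fetchUsers(kind: UserListKind, userID: String) async throws -> [UserSummary]
}

struct RESTUserListProvider: UserListProvider {
    let backendName = "REST"
    func fetchUsers(kind: UserListKind, userID: String) async throws -> [UserSummary] {
        // Stub: a real implementation would call the legacy REST endpoint and decode JSON.
        [UserSummary(id: "1", username: "alice")]
    }
}

struct GraphQLUserListProvider: UserListProvider {
    let backendName = "GraphQL"
    func fetchUsers(kind: UserListKind, userID: String) async throws -> [UserSummary] {
        // Stub: a real implementation would send a GraphQL query and parse the response.
        [UserSummary(id: "1", username: "alice")]
    }
}

/// Factory method: views never name a concrete type, so the experiment can
/// switch implementations (e.g., from a test flag) without touching UI code.
func makeUserListProvider(useGraphQL: Bool) -> any UserListProvider {
    if useGraphQL {
        return GraphQLUserListProvider()
    }
    return RESTUserListProvider()
}
```

Because both providers return the same model type, the views, and any latency markers around them, stay identical across the two arms of the test.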

Once the code was running, we needed to measure the impact on the end-to-end latency of fetching results from the network and rendering the content on screen. SLATE to the rescue! Using SLATE's performance markers, we could easily compare latency data for each of the different user view network implementations.

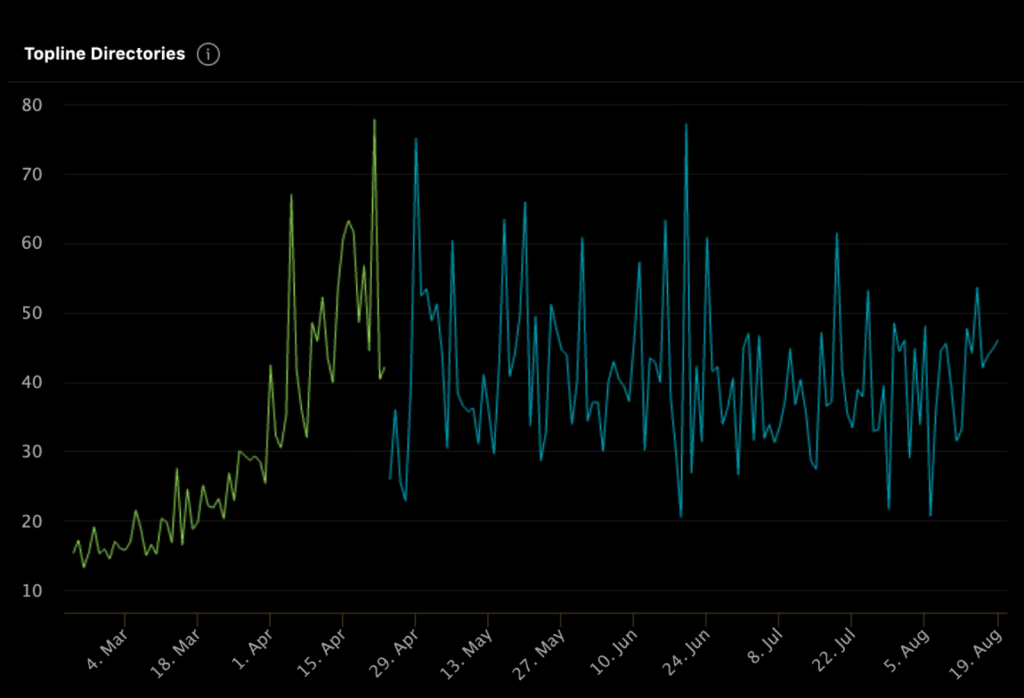

The graph shows example latency data (p95) for when a user views the list of their followers. The blue line compares the REST and GraphQL latency data, which are very similar. We saw similar results across all the different views, which gave the Threads iOS team confidence to adopt GraphQL for all new endpoints.
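Comparing the two implementations at p95 requires a percentile over each one's latency samples. A small sketch using the nearest-rank definition, which is one of several common percentile definitions (the dashboards may use another); the function names are hypothetical.

```swift
import Foundation

/// Nearest-rank percentile: the smallest sample such that at least `p` percent
/// of samples are less than or equal to it. Returns nil for empty input or invalid `p`.
func percentile(_ samples: [Double], _ p: Double) -> Double? {
    guard !samples.isEmpty, p > 0, p <= 100 else { return nil }
    let sorted = samples.sorted()
    // Multiply before dividing to avoid rounding error in p / 100.
    let rank = Int((p * Double(sorted.count) / 100).rounded(.up))
    return sorted[rank - 1]
}

/// True if `candidate` is at least as fast as `baseline` at p95, within `tolerance` seconds.
func isAtLeastAsFast(candidate: [Double], baseline: [Double], tolerance: Double = 0.0) -> Bool {
    guard let c = percentile(candidate, 95), let b = percentile(baseline, 95) else { return false }
    return c <= b + tolerance
}
```

Looking at p95 rather than the median surfaces the slow tail, which is where extra parsing or storage work would be most likely to show up.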

Deep dive: Post reliability and latency

As mentioned previously, cPSR is one of the top metrics we're trying to improve on Threads, because if people can't reliably post what they want, they'll have a terrible user experience. We also know from reading user-submitted bug reports that posting can be a source of frustration for people.

Let's dive into two features added to Threads iOS that approach improving the posting experience in very different ways: Drafts, and reducing the perceived latency of text posts.

Drafts

In early 2024, Threads introduced basic saving of drafts on iOS and Android. In addition to being one of our most user-requested features, Drafts provides resiliency against unexpected failures such as bad network connectivity. Looking at user-filed bug reports, we had seen that the top concern was being unable to post. Often users didn't know why they couldn't post. We knew a drafts feature would help with some of these concerns.

These user bug reports were used to measure the success of Drafts. Drafts doesn't directly move cPSR, which measures the reliability of posting in a single session, but we theorized it might result in either more posts being created or less overall user frustration with posting. We released Drafts to a small group of people and compared the number of posting-related bug reports they subsequently submitted with the number from people who didn't have Drafts. We discovered that 26 percent fewer people submitted bug reports about posting if they had Drafts. The feature was clearly making a difference.

We quickly followed up with a small but necessary improvement. Previously, if a user ran into a network issue while posting, they would be asked whether they wanted to retry or discard their post, but they were given no option to save it as a draft. This meant a lot of people who couldn't send were losing their post, which was frustrating. Unfortunately, measuring the impact of this resiliency feature was also difficult because not many people ran into it.
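A sketch of the failure prompt after that change, with hypothetical types: a failed post can now be retried, saved as a draft, or discarded, and only an explicit discard loses the user's text. `DraftStore` is an in-memory stand-in for the real Drafts storage.

```swift
import Foundation

/// Choices offered when a post fails to send. Before the follow-up change,
/// only `.retry` and `.discard` were available.
enum PostFailureAction: CaseIterable {
    case retry
    case saveAsDraft
    case discard
}

struct PendingPost {
    let text: String
}

/// Hypothetical in-memory stand-in for the real Drafts storage.
final class DraftStore {
    private(set) var drafts: [PendingPost] = []
    func save(_ post: PendingPost) { drafts.append(post) }
}

/// Applies the user's choice. Returns the post if it should be sent again.
func handleFailure(of post: PendingPost, action: PostFailureAction, drafts: DraftStore) -> PendingPost? {
    switch action {
    case .retry:
        return post
    case .saveAsDraft:
        drafts.save(post)
        return nil
    case .discard:
        return nil
    }
}
```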

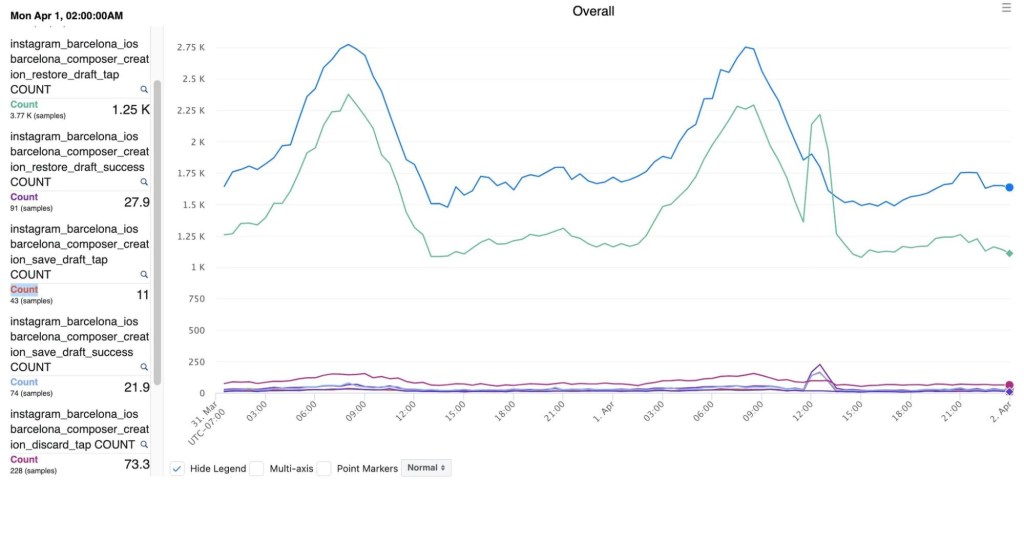

Then, a surprising thing happened: A serious bug took down all of Threads for a brief period of time. Although this was bad, it had the side effect of testing some of our resiliency features, including Drafts. We saw a huge spike in Drafts usage during the short outage, which confirmed that people were benefiting from being able to save their posts when there was a serious problem.

Figure 3 shows the spike in Drafts usage during the outage around noon on March 31.

Minimizing Drafts' local storage

After Drafts was released to the public, we discovered an unfortunate bug: The average amount of storage Threads used was increasing dramatically. People on Threads noticed, too, and posted a lot of complaints about it. Some of them reported that Threads was taking up many gigabytes of storage space. Maintaining a low disk footprint helps performance, and addressing this bug provided an opportunity to learn about the impact of excessive disk usage in Threads.

The culprit was Drafts. In the iOS app, we use PHPickerViewController, introduced in iOS 14, to power the photo and video gallery presented in the Composer.

PHPickerViewController is a nice component that runs out of process and gives users privacy and safety by allowing them to grant an app access to exactly the media they want. When a photo is selected, the app receives a URL that points to the image asset on the device. We found, however, that access to this image is only temporary; between sessions, Threads would lose permission to read an image that had been attached to a draft. In addition, if a user deleted an image from the gallery, it would also disappear from a draft, which was not ideal.

The solution was to copy photos and videos to an area of the application container dedicated to Drafts. Unfortunately, copied media wasn't being cleaned up completely, causing disk usage to grow, sometimes dramatically, over time.
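A hedged sketch of that approach using Foundation's `FileManager`: copy picked media into a Drafts-only directory, then sweep any file that no saved draft still references. The sweep is the step that was incomplete in the bug described above. `DraftMediaStore`, the directory layout, and the naming scheme are assumptions for illustration.

```swift
import Foundation

/// Hypothetical owner of the Drafts media directory inside the app container.
struct DraftMediaStore {
    let directory: URL
    private let fileManager = FileManager.default

    init(directory: URL) throws {
        self.directory = directory
        try fileManager.createDirectory(at: directory, withIntermediateDirectories: true)
    }

    /// Copies a picked file (whose URL may only be readable temporarily) into
    /// Drafts storage under a unique name and returns the durable copy's URL.
    func importMedia(from source: URL) throws -> URL {
        let name = UUID().uuidString + "-" + source.lastPathComponent
        let destination = directory.appendingPathComponent(name)
        try fileManager.copyItem(at: source, to: destination)
        return destination
    }

    /// Deletes every file in Drafts storage that no saved draft still references
    /// (identified by file name). Returns the number of files removed.
    @discardableResult
    func removeOrphans(referenced: Set<String>) throws -> Int {
        let files = try fileManager.contentsOfDirectory(atPath: directory.path)
        var removed = 0
        for name in files where !referenced.contains(name) {
            try fileManager.removeItem(at: directory.appendingPathComponent(name))
            removed += 1
        }
        return removed
    }
}
```

Running the sweep when drafts are published or deleted, and again at launch, is one way to catch copies left behind by crashes between the two steps.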

Cleaning up this excessive disk usage had dramatic results in areas we didn't expect. App launch became faster (-0.35%), our daily active users grew (+0.21%), and people posted quite a bit more original content (+0.76%).

Blazing-fast text posts

As with the navigation latency boundary test, the performance team had previously measured the impact of latency on text replies and knew we wanted to improve it. In addition to implementing improvements that reduce absolute latency, we decided to reduce perceived latency.

A new feature in Threads' network stack allows the server to notify a client when a posting request has been fully received, but before it has been processed and published. Most failures happen between the mobile client and Threads' servers, so once a request is received, it's very likely to succeed.

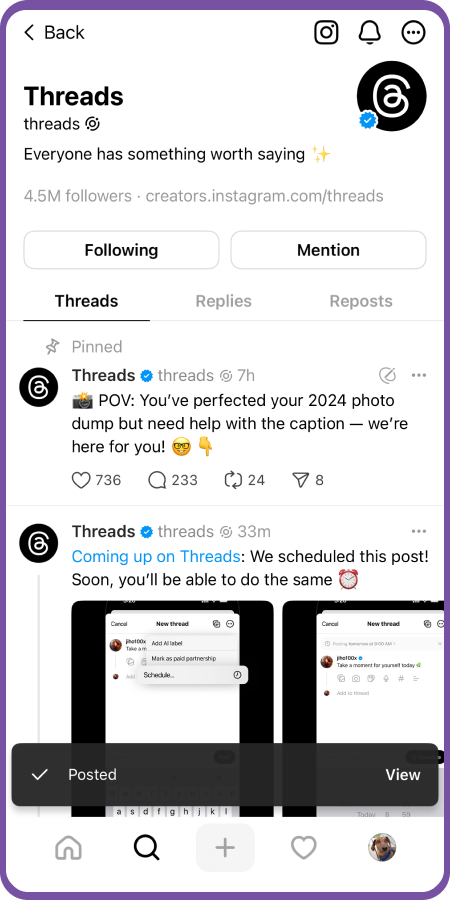

Using the new server-acknowledgement callback, the iOS client can now present the "Posted" toast when a post request is received, before the post is fully created in the backend. As a result, text posts appear to publish a little faster. The result is a better user experience that makes the app feel more conversational.
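A sketch of the client side, assuming a hypothetical event model in which the server reports `received` before `published`. The toast is shown on the first acknowledgement rather than on final publication, and a failure is still surfaced if one arrives.

```swift
import Foundation

/// Events a posting request might report (hypothetical protocol).
enum PostRequestEvent {
    case received    // server has the full request; success is now very likely
    case published   // post fully created in the backend
    case failed
}

/// Tracks what the UI should show for one text post.
struct PostProgressUI {
    private(set) var showedPostedToast = false
    private(set) var showedFailure = false

    mutating func handle(_ event: PostRequestEvent) {
        switch event {
        case .received, .published:
            // Show "Posted" as soon as the server acknowledges receipt,
            // instead of waiting for backend processing to finish.
            showedPostedToast = true
        case .failed:
            // Rare once the request is received, but still shown to the user.
            showedFailure = true
        }
    }
}
```

The design trades a small chance of showing "Posted" for a post that later fails for a consistently faster-feeling experience, which is reasonable only because most failures happen before the server receives the request.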

Adopting Swift Concurrency for more stable code

Migrating the Threads iOS publishing code from a synchronous model to an asynchronous one also revealed the potential for race conditions. In addition to the asynchronous transcoding step mentioned previously, there were some new asynchronous steps related to managing upload tasks and media metadata. We noticed some mysterious malformed payloads that turned up only occasionally in our analytics and dashboards. Operating at massive scale tends to turn up rare edge cases that can have negative consequences for performance metrics and give people a bad user experience.

One of the best things about working in the Threads code base is that it's mostly in Swift. Some of the publishing code was written in Objective-C, though. While Objective-C has a lot of benefits, Swift's strong data-race protections and type safety would be an improvement, so we decided to migrate Threads' publishing code to Swift.

iOS teams throughout Meta are adopting Swift's "complete" concurrency checking in preparation for moving to Swift 6. On the Threads team, we've been migrating older Swift code and using complete concurrency checking in new frameworks that we're building. Moving to complete concurrency checking is probably the biggest change to iOS development since Automatic Reference Counting (ARC) was introduced way back in 2011. When you adopt complete concurrency checking, Swift does a great job of preventing pesky data races, such as some that were causing issues with our optimistic uploader. If you haven't started adopting Swift's strict concurrency by enabling complete concurrency checking in your code, you might find that doing so makes your code more stable and less prone to hard-to-debug problems caused by data races.
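As an illustration of the kind of race that strict concurrency rules out, here is a hedged sketch, with hypothetical names, of upload bookkeeping moved into an actor. Because the state lives inside the actor, the compiler rejects unsynchronized access from concurrent transcoding and upload callbacks, and a payload is only built once both steps have finished, so it can't be assembled from a half-updated entry.

```swift
import Foundation

struct MediaMetadata: Sendable, Equatable {
    let width: Int
    let height: Int
}

/// Hypothetical upload bookkeeping; the actor serializes all access to its state.
actor UploadRegistry {
    private var metadata: [String: MediaMetadata] = [:]
    private var completedUploads: Set<String> = []

    func recordTranscode(id: String, metadata: MediaMetadata) {
        self.metadata[id] = metadata
    }

    func markUploaded(id: String) {
        completedUploads.insert(id)
    }

    /// Returns a payload only when both the transcode and the upload have finished.
    func payload(for id: String) -> MediaMetadata? {
        guard completedUploads.contains(id) else { return nil }
        return metadata[id]
    }
}
```

The same bookkeeping in a plain class shared across threads would compile without complaint; with an actor, every call site must `await`, which makes the synchronization explicit.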

The future of Threads iOS performance

As Threads continues to scale in its second year and beyond, the iOS app must adapt to meet new challenges. As we add new product features, we will keep monitoring our time-tested metrics such as %FIRE, TTNC, and cPSR to make sure the user experience doesn't degrade. We're updating the code that delivers posts to you, so that you see content faster and encounter fewer loading indicators. We'll continue to take advantage of the most modern language features in Swift, which will make the app more stable and faster to build and load into memory. Meanwhile, we're going to iterate on and evolve tools like SLATE that help us improve our testing and debug regressions.

As part of the Threads community, you can also contribute to making the app better. We mentioned earlier that user-submitted bug reports were used to identify areas for the development team to focus on and to verify that features like Drafts were actually solving user frustrations. In both Threads and Instagram, you can long-press the Home tab or shake your phone to submit a bug report. We really do read them.